rm(list=ls())8 Remote sensing

8.1 Introduction

Remote sensing techniques, in the sense of gathering & processing of data by a device separated from the object under study, are increasingly providing an important component of the set of technologies available for the study of vegetation systems and their functioning. This is in spite that many applications only provide indirect estimations of the biophysical variables of interest (Jones and Vaughan 2010).

Particular advantages of remote sensing for vegetation studies are that: (i) it is non-contact and non-destructive; and (ii) observations are easily extrapolated to larger scales. Even at the plant scale, remotely sensed imagery is advantageous as it allows rapid sampling of large number of plants (Jones and Vaughan 2010).

This chapter aims at providing a conceptual & practical approach to apply remote sensing data and techniques to infer information useful for monitoring crop diseases. The structure of this chapter is divided into four sections. The first one introduces basic remote sensing concepts and provides a summary of applications of remote sensing of crop diseases. The second one illustrates a case study focused on identification of banana Fusarium wilt from multispectral UAV imagery. The third one illustrates a case study dealing with estimation of cercospora leaf spot disease on table beet. Finally, it concludes with several reflections about potential and limitations of this technology.

8.2 Remote sensing background

8.2.1 Optical remote sensing

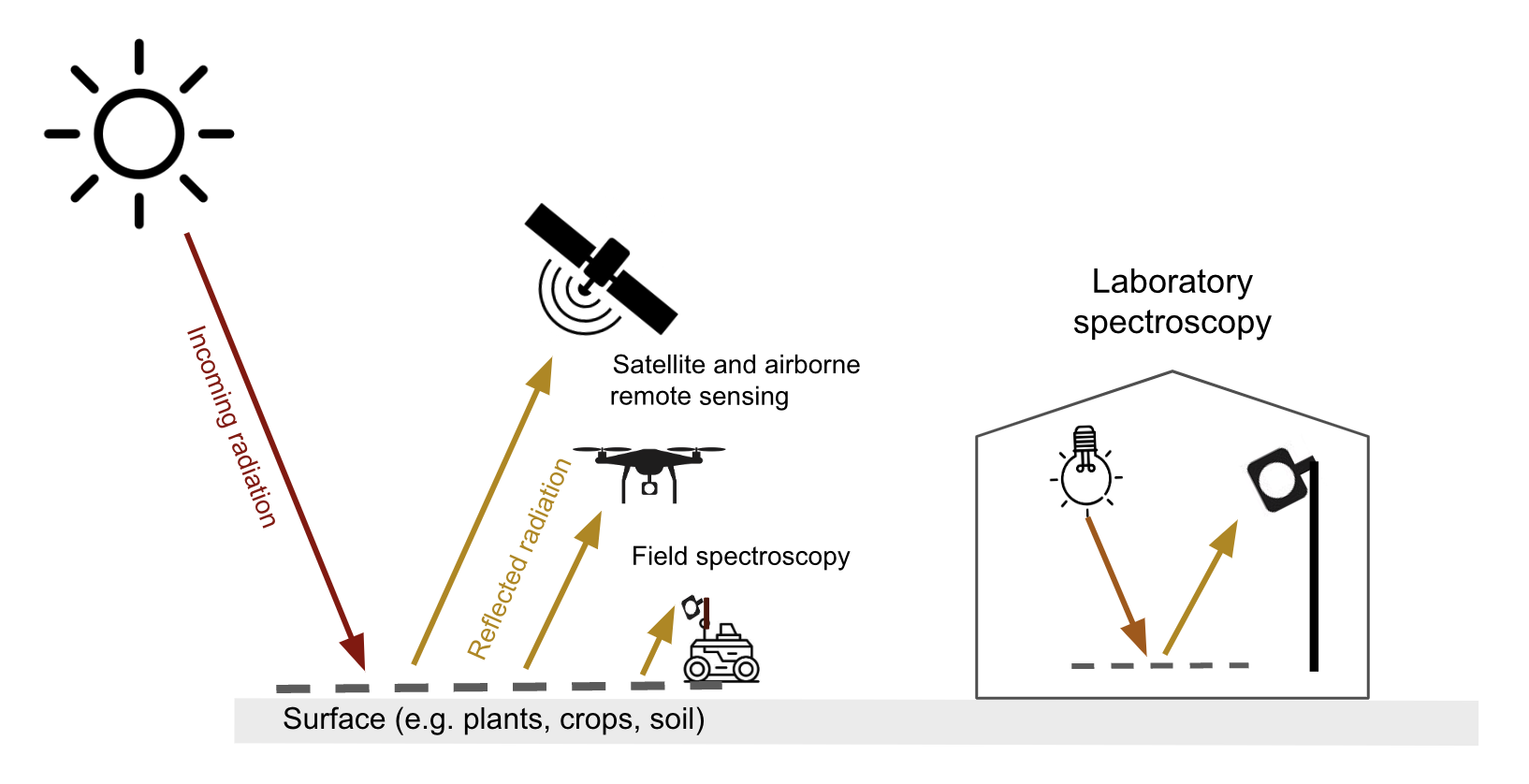

Optical remote sensing makes use of the radiation reflected by a surface in the visible (~400-700 nm), the near infrared (700-1300 nm) and shortwave infrared (1300-~3000 nm) parts of the electromagnetic spectrum. Spaceborne & airborne-based remote sensing and field spectroscopy utilize the solar radiation as an illumination source. Lab spectroscopy utilizes a lamp as an artificial illumination source Figure 8.1.

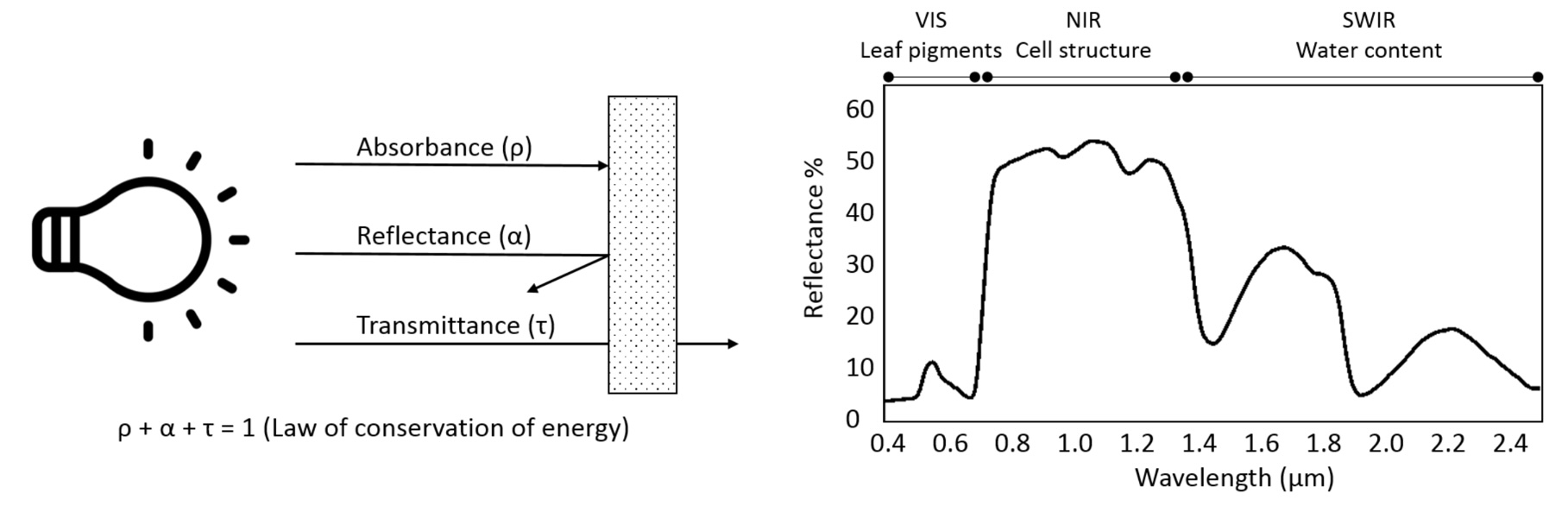

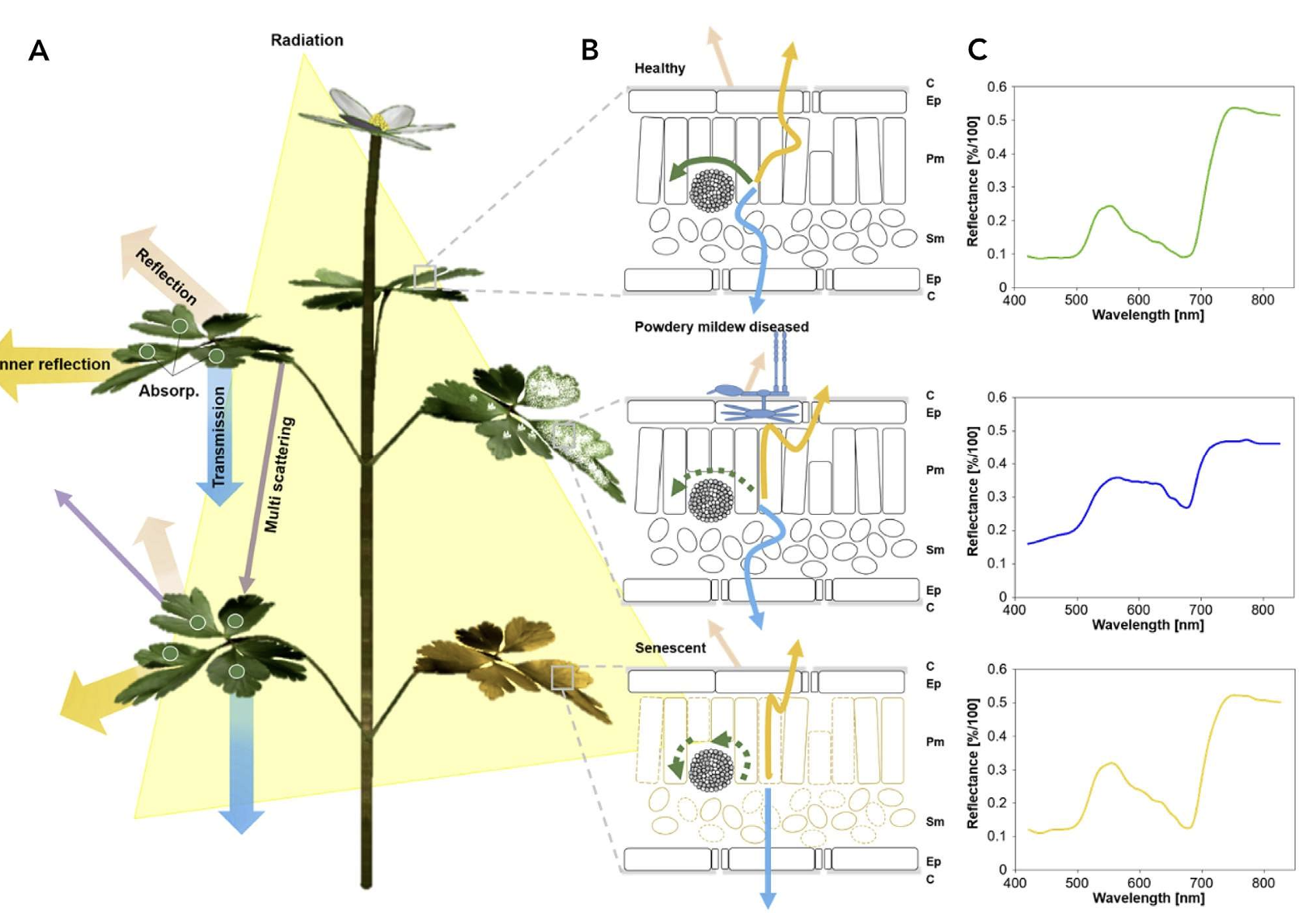

The proportion of the radiation reflected by a surface depends on the surface’s spectral reflection, absorption and transmission properties and varies with wavelength Figure 8.2. These spectral properties in turn depend on the surface’s physical and chemical constituents Figure 8.2. Measuring the reflected radiation hence allows us to draw conclusions on a surface’s characteristic, which is the basic principle behind optical remote sensing.

8.2.2 Vegetation spectral properties

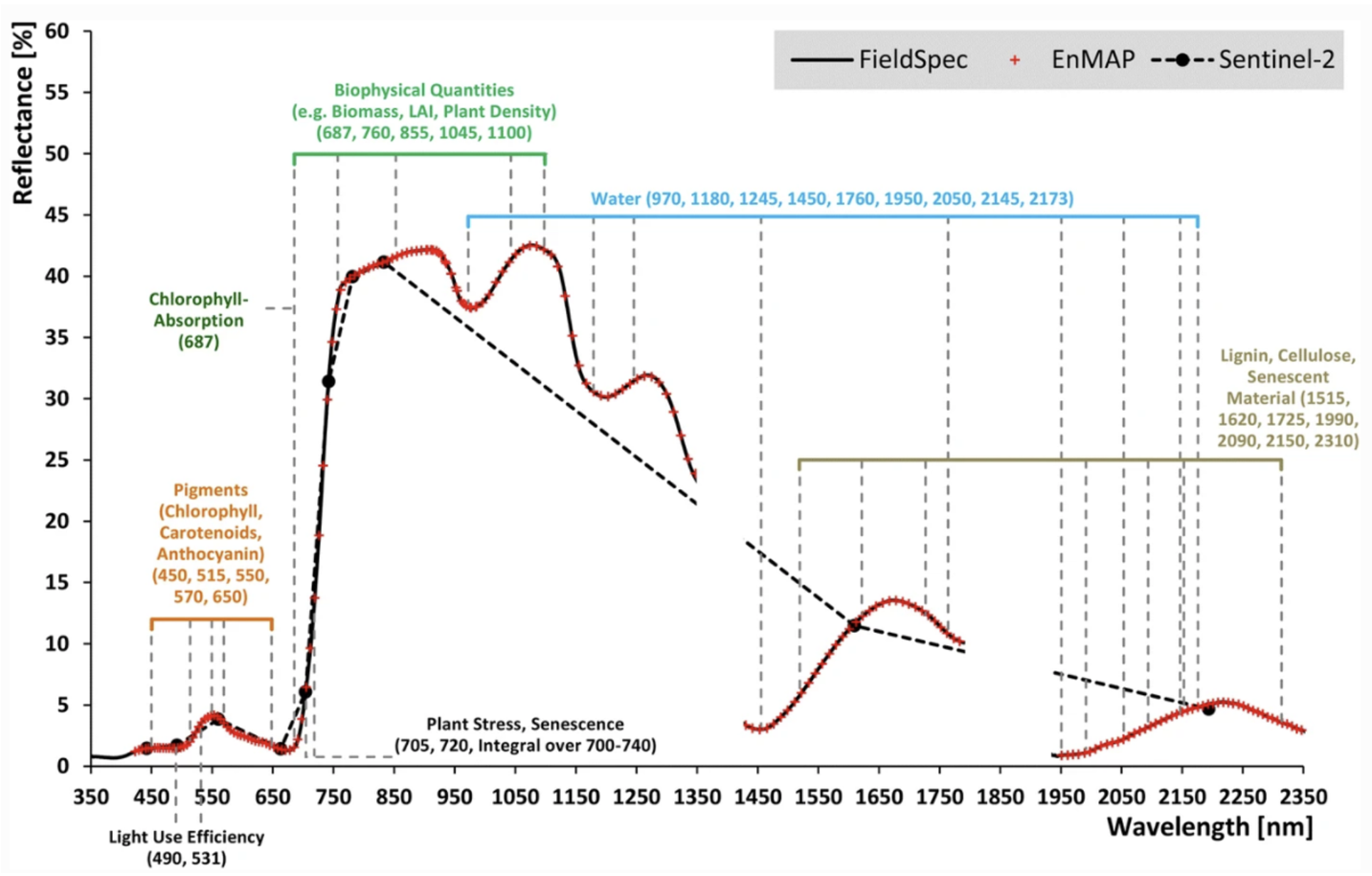

Optical remote sensing enables the deduction of various vegetation-related characteristics, including biochemical properties (e.g., pigments, water content), structural properties (e.g., leaf area index (LAI), biomass) or process properties (e.g., light use efficiency (LUE)). The ability to deduce these characteristics depends on the ability of a sensor to resolve vegetation spectra. Hyperspectral sensors capture spectral information in hundreds of narrow and contiguous bands in the VIS, NIR and SWIR, and, thus, resolve subtle absorption features caused by specific vegetation constituents (e.g. anthocyanins, carotenoids, lignin, cellulose, proteins). In contrast, multispectral sensors capture spectral information in a few broad spectral bands and, thus, only resolve broader spectral features. Still, multispectral systems like Sentinel-2 have been demonstrated to be useful to derive valuable vegetation properties (e.g., LAI, chlorophyll).

8.2.3 What measures a remote sensor?

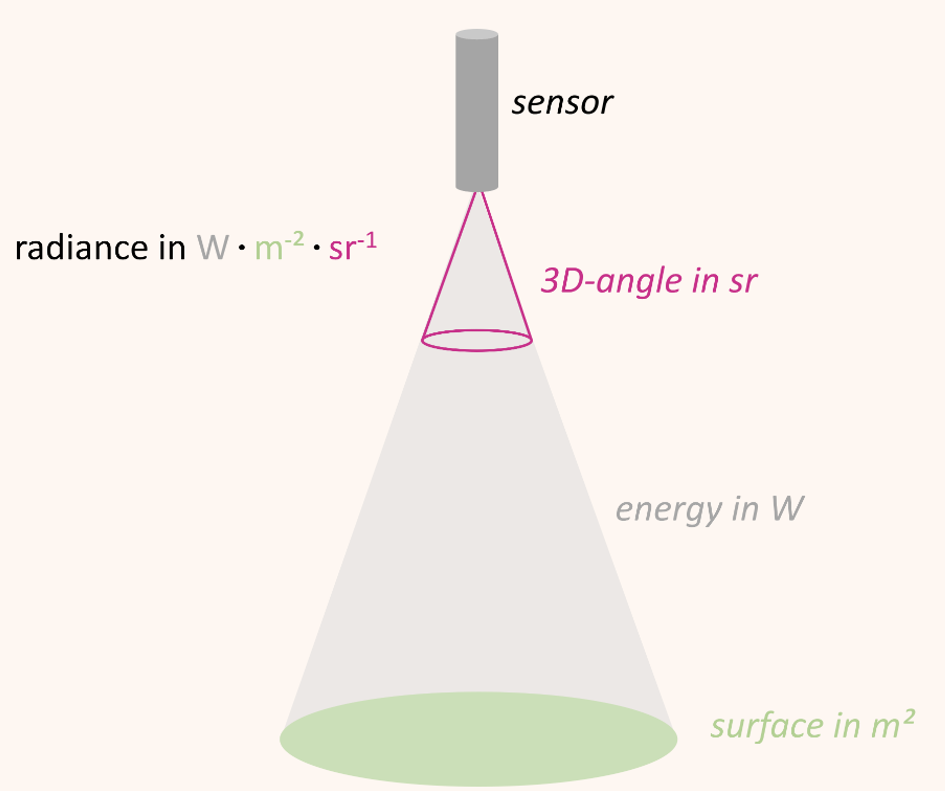

Optical sensors/spectrometers measure the radiation reflected by a surface to a certain solid angle in the physical quantity radiance. The unit of radiance is watts per square meter per steradian (W • m-2 • sr-1) Figure 8.4. In other words, radiance describes the amount of energy (W) that is reflected from a surface (m-2) and arrives at the sensor in a three-dimensional angle (sr-1).

A general problem related to the use of radiance as unit of measurement is the variation of radiance values with illumination. For example, the absolute incoming solar radiation varies over the course of the day as a function of the relative position between sun and surface and so does the absolute amount of radiance measured. We can only compare measurements taken a few hours apart or on different dates when we are putting the measured radiance in relation to the incoming illumination.

The quotient between measured reflected radiance and measured incoming radiance (Radiancereflected / Radianceincoming) is called reflectance (usually denoted as \(\rho\)). Reflectance provides a stable unit of measurement which is independent from illumination and is the percentage of the total measurable radiation, which has not been absorbed or transmitted.

8.2.4 Hyperspectral vs.multispectral imagery

Hyperspectral imaging involves capturing and analyzing data from a large number of narrow, contiguous bands across the electromagnetic spectrum, resulting in a high-resolution spectrum for each pixel in the image. As a result, a hyperspectral camera provides smooth spectra. The spectra provided by multispectral cameras are more like stairs or saw teeth without the ability to depict acute spectral signatures Figure 8.6.

8.2.5 Vegetation Indices

A vegetation index (VI) represents a spectral transformation of two or more bands of spectral imagery into a singleband image. A VI is designed to enhance the vegetation signal with regard to different vegetation properties, while minimizing confounding factors such as soil background reflectance, directional, or atmospheric effects. There are many different VIs, including multispectral broadband indices as well as hyperspectral narrowband indices.

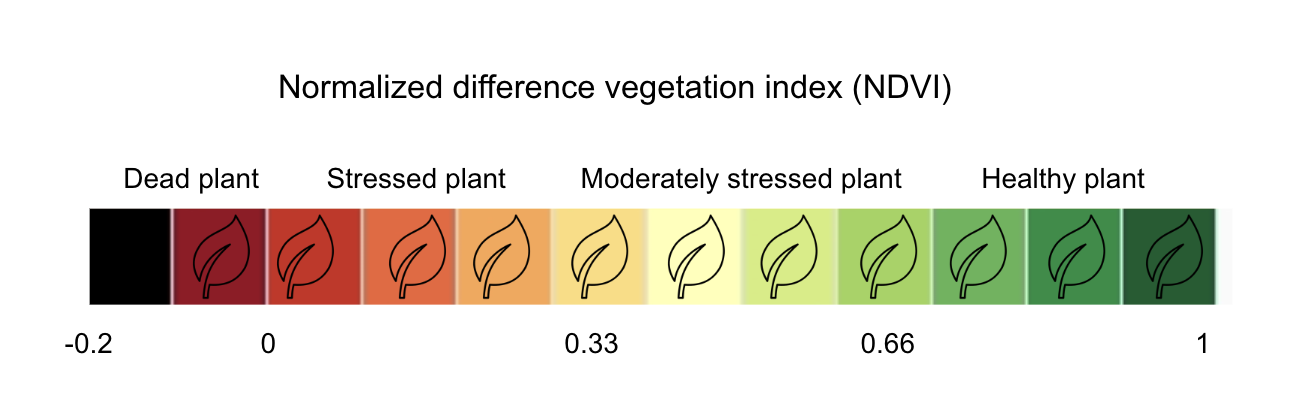

Most of the multispectral broadband indices make use of the inverse relationship between the lower reflectance in the red (through chlorophyll absorption) and higher reflectance in the near-infrared (through leaf structure) to provide a measure of greenness that can be indirectly related to biochemical or structural vegetation properties (e.g., chlorophyll content, LAI). The Normalized Difference Vegetation Index (NDVI) is one of the most commonly used broadband VIs:

\[NDVI = \frac{\rho_{nir} - \rho_{red} }{\rho_{nir} + \rho_{red}}\]

The interpretation of the absolute value of the NDVI is highly informative, as it allows the immediate recognition of the areas of the farm or field that have problems. The NDVI is a simple index to interpret: its values vary between -1 and 1, and each value corresponds to a different agronomic situation, regardless of the crop Figure 8.5

8.3 Remote sensing of crop diseases

8.3.1 Detection of plant stress

One popular use of remote sensing is in diagnosis and monitoring of plant responses to biotic (i.e. disease and insect damage) and abiotic stress (e.g. water stress, heat, high light, pollutants) with hundreds of publications on the topic. It is worth nothing that most available techniques monitor the plant response rather than the stress itself. For example, with some diseases, it is common to estimate changes in canopy cover (using vegetation indices) as measures of “disease” but this measure could also be associated to water deficit (Jones and Vaughan 2010). This highlights the importance of measuring crop conditions in the field & laboratory to collect reliable data and be able to disentangle complex plant responses. Anyway, remote sensing can be used as the first step in site-specific disease control and also to phenotype the reactions of plant genotypes to pathogen attack (Lowe et al. 2017).

8.3.2 Optical methods for measuring crop disease

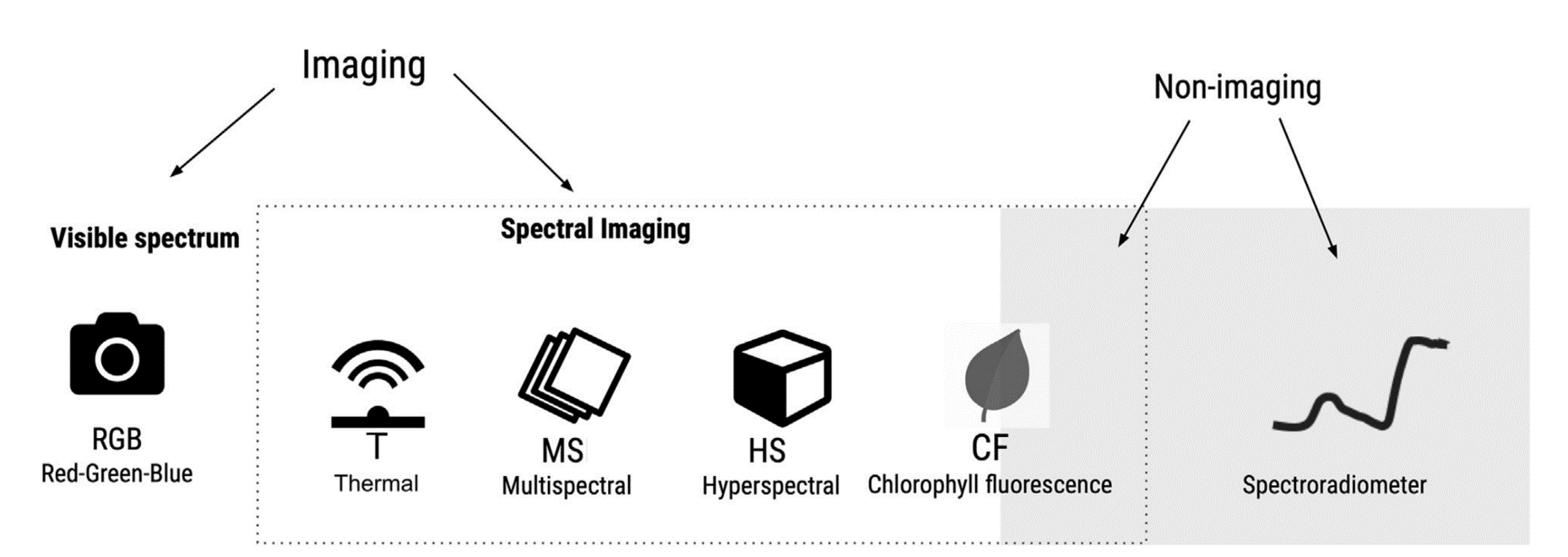

There are a variety of optical sensors for the assessment of plant diseases. Sensors can be based only on the visible spectrum (400-700 nm) or on the visible and/or infrared spectrum (700 nm - 1mm). The latter may include near-infrared (NIR) (0.75-1.4 \(μm\)), short wavelength infrared (SWIR) (1.4–3 \(μm\)), medium wavelength infrared (MWIR) (3-8 \(μm\)), or thermal infrared (8-15 \(μm\)) Figure 8.6. Sensors record either imaging or non imaging (i.e average) spectral radiance values which need to be converted to reflectance before conducting any crop disease monitoring task.

In a recent chapter of Agrio’s Plant Pathology, Del Ponte et al. (2024) highlights the importance of understanding the basic principles of the interaction of light with plant tissue or the plant canopy as a crucial prerrequisite for the analysis and interpretation for disease assessment. When a plant is infected, there are changes to the phisiology and biochemistry of the host, with the eventual development of disease symptoms and/or signs of the pathogen which may be accompanied by structural and biochemical changes that affect absorbance, transmittance, and reflectance of light Figure 8.8.

8.3.3 Scopes of disease sensing

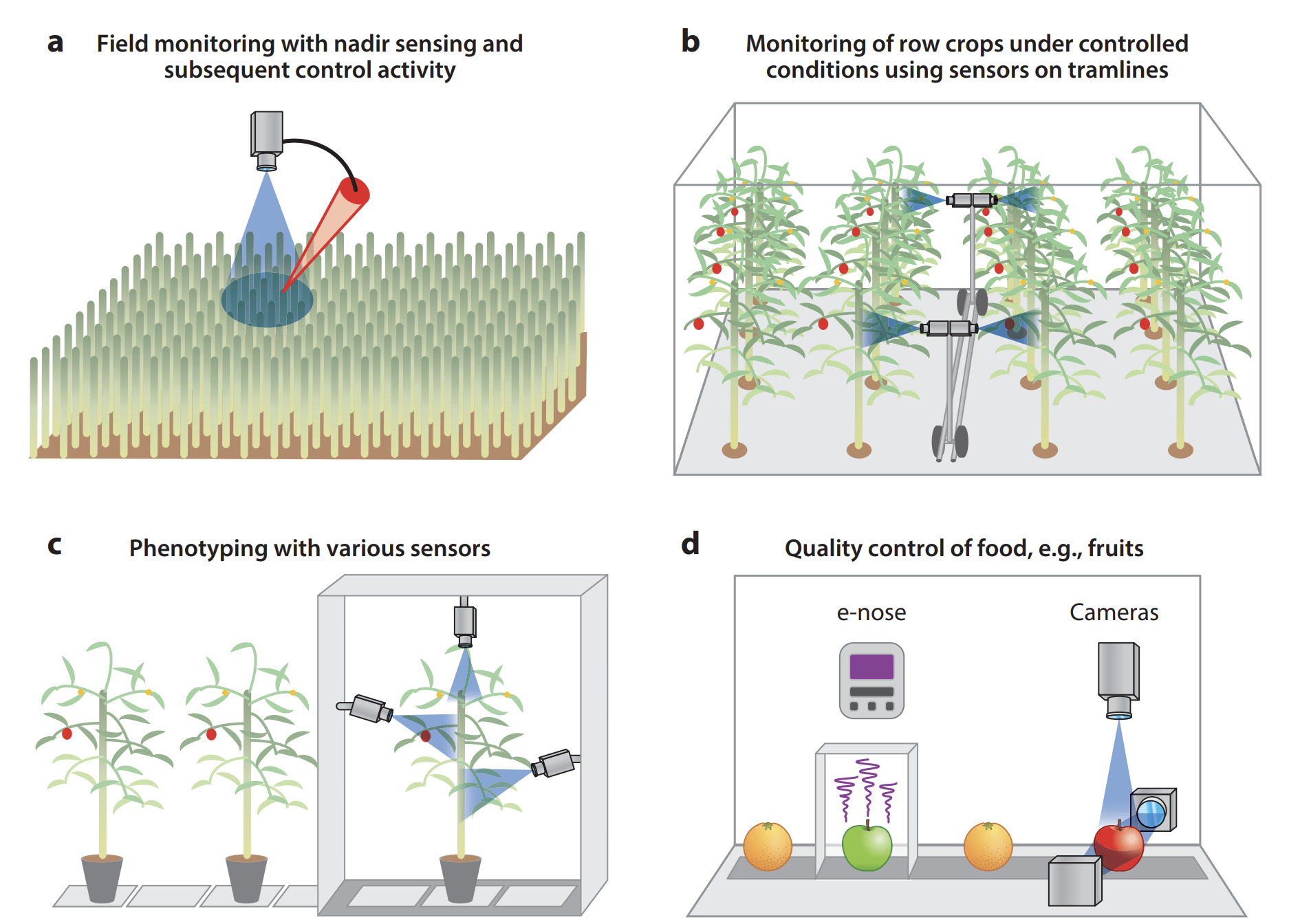

The quantification of typical disease symptoms (disease severity) and assessment of leaves infected by several pathogens are relatively simple for imaging systems but may become a challenge for nonimaging sensors and sensors with inadequate spatial resolution (Oerke 2020). Systematic monitoring of a crop by remote sensors can allow farmers to take preventive actions if infections are detected early. Remote sensing sensors & processing techniques need to be carefully selected to be capable of (a) detecting a deviation in the crop’s health status brought about by pathogens, (b) identifying the disease, and (c) quantifying the severity of the disease. Remote sensing can also be effectively used in (d) food quality control Figure 8.8.

8.3.4 Monitoring plant diseases

Sensing of plants for precision disease control is done in large fields or greenhouses where the aim is to detect the occurrence of diseases at the early stages of epidemics, i.e., at low symptom frequency. Lowe et al. (2017) reviewed hyperspectral imaging of plant diseases, focusing on early detection of diseases for crop monitoring. They report several analysis techniques successfully used for the detection of biotic and abiotic stresses with reported levels of accuracy higher than 80%.

| Technique | Plant (stress) |

|---|---|

| Quadratic discriminant analysis (QDA) | Wheat (yellow rust) |

| Avacado (laurel wilt) | |

| Decision tree (DT) | Avacado (laurel wilt) |

| Sugarbeet (cerospora leaf spot) | |

| Sugarbeet (powdery mildew) | |

| Sugarbeet (leaf rust) | |

| Multilayer perceptron (MLP) | Wheat (yellow rust) |

| Partial least square regression (PLSR) | Celery (sclerotinia rot) |

| Raw | |

| Savitsky-Golay 1st derivative | |

| Savitsky-Golay 2nd derivative | |

| Partial least square regression (PLSR) | Wheat (yellow rust) |

| Fishers linear determinant analysis | Wheat (aphid) |

| Wheat (powdery mildew) | |

| Wheat (powdery mildew) | |

| Erosion and dilation | Cucumber (downey mildew) |

| Spectral angle mapper (SAM) | Sugarbeet (cerospora leaf spot) |

| Sugarbeet (powdery mildew) | |

| Sugarbeet (leaf rust) | |

| Wheat (head blight) | |

| Artificial neural network (ANN) | Sugarbeet (cerospora leaf spot) |

| Sugarbeet (powdery mildew) | |

| Sugarbeet (leaf rust) | |

| Support vector machine (SVM) | Sugarbeet (cerospora leaf spot) |

| Sugarbeet (powdery mildew) | |

| Sugarbeet (leaf rust) | |

| Barley (drought) | |

| Spectral information divergence (SID) | Grapefruit |

| (canker, greasy spot, insect | |

| damage, scab, wind scar) |

Lowe et al. (2017) state that remote sensing of diseases under production conditions is challenging because of variable environmental factors and crop-intrinsic characteristics, e.g., 3D architecture, various growth stages, variety of diseases that may occur simultaneously, and the high sensitivity required to reliably perceive low disease levels suitable for decision-making in disease control. The use of less sensitive systems may be restricted to the assessment of crop damage and yield losses due to diseases.

8.3.5 UAV applications for plant disease detection and monitoring

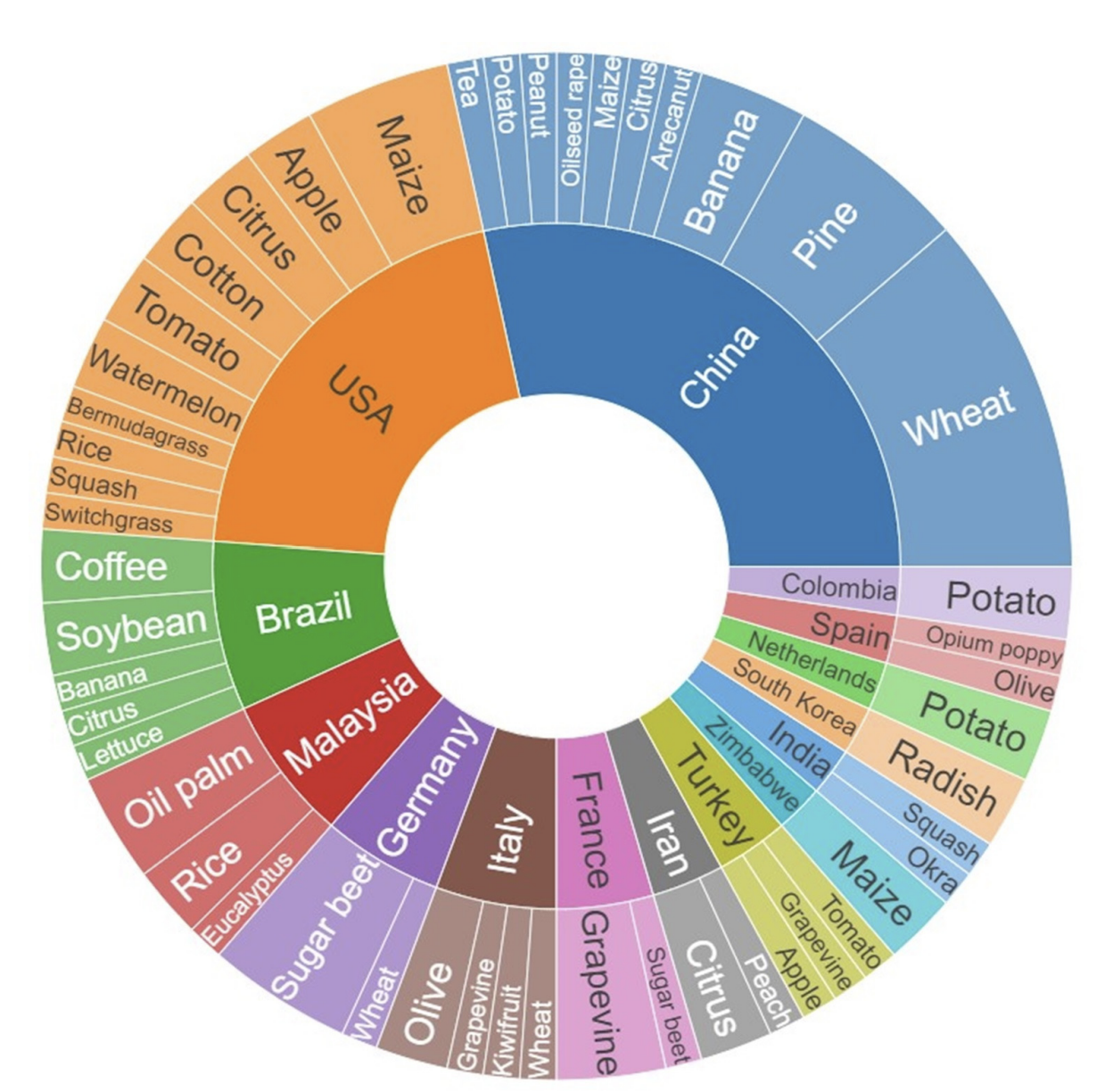

Kouadio et al. (2023) undertook a systematic quantitative literature review to summarize existing literature in UAV-based applications for plant disease detection and monitoring. Results reveal a global disparity in research on the topic, with Asian countries being the top contributing countries. World regions such as Oceania and Africa exhibit comparatively lesser representation. To date, research has largely focused on diseases affecting wheat, sugar beet, potato, maize, and grapevine Figure 8.9. Multispectral, red-green-blue, and hyperspectral sensors were most often used to detect and identify disease symptoms, with current trends pointing to approaches integrating multiple sensors and the use of machine learning and deep learning techniques. The authors suggest that future research should prioritize (i) development of cost-effective and user-friendly UAVs, (ii) integration with emerging agricultural technologies, (iii) improved data acquisition and processing efficiency (iv) diverse testing scenarios, and (v) ethical considerations through proper regulations.

8.4 Disease detection

This section illustrates the use of unmanned aerial vehicle (UAV) remote sensing imagery for identifying banana wilt disease. Fusarium wilt of banana, also known as “banana cancer”, threatens banana production areas worldwide. Timely and accurate identification of Fusarium wilt disease is crucial for effective disease control and optimizing agricultural planting structure (Pegg et al. 2019).

A common initial symptom of this disease is the appearance of a faint pale yellow streak at the base of the petiole of the oldest leaf. This is followed by leaf chlorosis which progresses from lower to upper leaves, wilting of leaves and longitudinal splitting of their bases. Pseudostem splitting of leaf bases is more common in young, rapidly growing plants [Pegg et al. (2019)]Figure 8.10.

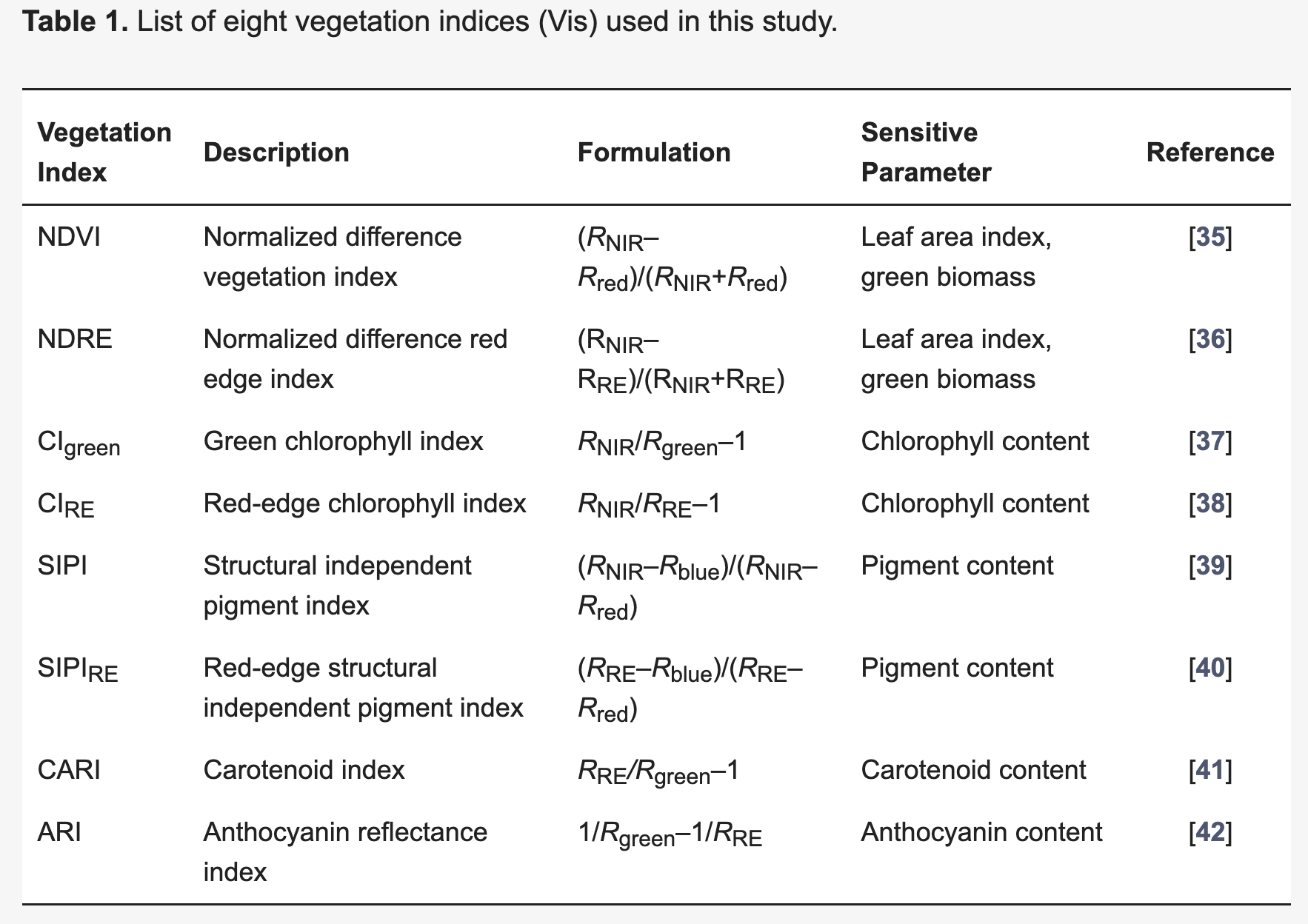

Ye et al. (2020) made publicly available experimental data (Huichun YE et al. 2022) on wilted banana plants collected in a banana plantation located in Long’an County, Guangxi (China). The data set includes UAV multispectral reflectance data and ground survey data on the incidence of banana wilt disease. The paper by Ye et al. (2020) reports that the banana Fusarium wilt disease can be easily identified using several vegetation indices (VIs) obtained from this data set. Tested VIs include green chlorophyll index (CIgreen), red-edge chlorophyll index (CIRE), normalized difference vegetation index (NDVI), and normalized difference red-edge index (NDRE). The dataset can be downloaded from here.

8.4.1 Software setup

Let’s start by cleaning up R memory:

Then, we need to install several packages (if they are not installed yet):

list.of.packages <- c("terra",

"tidyterra",

"stars",

"sf",

"leaflet",

"leafem",

"dplyr",

"ggplot2",

"tidymodels")

new.packages <- list.of.packages[!(list.of.packages %in% installed.packages()[,"Package"])]

if(length(new.packages)) install.packages(new.packages)Now, let’s load all the required packages:

library(terra)

library(tidyterra)

library(stars)

library(sf)

library(leaflet)

library(leafem)

library(dplyr)

library(ggplot2)

library(tidymodels)8.4.2 Reading the dataset

Next code supposes you have already downloaded the Huichun YE et al. (2022) dataset and unzipped its content under the data/banana_data directory.

8.4.3 File formats

Let’s list the files under each subfolder:

list.files("data/banana_data/1_UAV multispectral reflectance")[1] "UAV multispectral reflectance.tfw"

[2] "UAV multispectral reflectance.tif"

[3] "UAV multispectral reflectance.tif.aux.xml"

[4] "UAV multispectral reflectance.tif.ovr" Note that the .tif file contains an orthophotomosaic of surface reflectance. It was created from UAV images taken with a Micasense Red Edge M camera which has five narrow spectral bands: Blue (465–485 nm), green (550–570 nm), red (653–673 nm), red edge (712–722 nm), and near-infrared (800–880 nm). We assume here that those images have been radiometrically and geometrically corrected.

list.files("data/banana_data/2_Ground survey data of banana Fusarium wilt")[1] "Ground_survey_data_of_banana_Fusarium_wilt.dbf"

[2] "Ground_survey_data_of_banana_Fusarium_wilt.prj"

[3] "Ground_survey_data_of_banana_Fusarium_wilt.sbn"

[4] "Ground_survey_data_of_banana_Fusarium_wilt.sbx"

[5] "Ground_survey_data_of_banana_Fusarium_wilt.shp"

[6] "Ground_survey_data_of_banana_Fusarium_wilt.shp.xml"

[7] "Ground_survey_data_of_banana_Fusarium_wilt.shx" This is shapefile with 80 points where the plant health status was collected in same date as the images.

list.files("data/banana_data/3_Boundary of banana planting region")[1] "Boundary_of_banana_planting_region.dbf"

[2] "Boundary_of_banana_planting_region.prj"

[3] "Boundary_of_banana_planting_region.sbn"

[4] "Boundary_of_banana_planting_region.sbx"

[5] "Boundary_of_banana_planting_region.shp"

[6] "Boundary_of_banana_planting_region.shp.xml"

[7] "Boundary_of_banana_planting_region.shx" This is a shapefile with one polygon representing the boundary of the study area.

8.4.4 Read the orthomosaic and the ground data

Now, let’s read the orthomosaic using the terra package:

# Open the tif

tif <- "data/banana_data/1_UAV multispectral reflectance/UAV multispectral reflectance.tif"

rrr <- terra::rast(tif)Let’s check what we get:

rrrclass : SpatRaster

dimensions : 7885, 14420, 5 (nrow, ncol, nlyr)

resolution : 0.08, 0.08 (x, y)

extent : 779257.9, 780411.5, 2560496, 2561127 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 48N (EPSG:32648)

source : UAV multispectral reflectance.tif

names : UAV mu~ance_1, UAV mu~ance_2, UAV mu~ance_3, UAV mu~ance_4, UAV mu~ance_5

min values : 0.000000, 0.000000, 0.000000, 0.0000000, 0.000000

max values : 1.272638, 1.119109, 1.075701, 0.9651694, 1.069767 Note that this is a 5-band multispectral image with 8 cm pixel size.

Now, let’s read the ground data:

shp <- "data/banana_data/2_Ground survey data of banana Fusarium wilt/Ground_survey_data_of_banana_Fusarium_wilt.shp"

ggg <- sf::st_read(shp)Reading layer `Ground_survey_data_of_banana_Fusarium_wilt' from data source

`/Users/emersondelponte/Documents/GitHub/epidemiology-R/data/banana_data/2_Ground survey data of banana Fusarium wilt/Ground_survey_data_of_banana_Fusarium_wilt.shp'

using driver `ESRI Shapefile'

Simple feature collection with 80 features and 4 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 779548.9 ymin: 2560702 xmax: 780097 ymax: 2561020

Projected CRS: WGS 84 / UTM zone 48NWhat we got?

gggSimple feature collection with 80 features and 4 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 779548.9 ymin: 2560702 xmax: 780097 ymax: 2561020

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

OBJECTID 样点类型 x_经度 y_纬度 geometry

1 1 健康植株 107.7326 23.13240 POINT (779838.5 2560800)

2 2 健康植株 107.7332 23.13316 POINT (779901.2 2560885)

3 3 健康植株 107.7334 23.13394 POINT (779920.1 2560971)

4 4 健康植株 107.7326 23.13430 POINT (779837.5 2561010)

5 5 健康植株 107.7302 23.13225 POINT (779595.2 2560779)

6 6 健康植株 107.7301 23.13190 POINT (779584.6 2560739)

7 7 健康植株 107.7300 23.13297 POINT (779569.6 2560857)

8 8 健康植株 107.7315 23.13301 POINT (779729.4 2560865)

9 9 健康植株 107.7313 23.13245 POINT (779710.5 2560803)

10 10 健康植株 107.7349 23.13307 POINT (780078.9 2560879)Note that the attributes are in Chinese language. It seems that we will need to do several changes.

8.4.5 Visualizing the data

As the orthomosaic is too heavy to visualize, we will need a coarser version of it. Let’s use the terra package for doing it.

rrr8 <- terra::aggregate(rrr, 8)

|---------|---------|---------|---------|

=========================================

#terra <- resample(elev, template, method='bilinear')Let’s check the output:

rrr8class : SpatRaster

dimensions : 986, 1803, 5 (nrow, ncol, nlyr)

resolution : 0.64, 0.64 (x, y)

extent : 779257.9, 780411.8, 2560496, 2561127 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 48N (EPSG:32648)

source(s) : memory

names : UAV mu~ance_1, UAV mu~ance_2, UAV mu~ance_3, UAV mu~ance_4, UAV mu~ance_5

min values : 0.000000, 0.000000, 0.000000, 0.000000, 0.000000

max values : 1.272638, 1.119109, 1.075701, 0.949925, 1.069767 Note that the pixel size of the aggregated raster is 64 cm. Now, in order to visualize the ground points, we will need a color palette:

pal <- colorFactor(

palette = c('green', 'red'),

domain = ggg$样点类型

)Then, we will use the leaflet package to plot the new image and the ground points:

leaflet(data = ggg) |>

addProviderTiles("Esri.WorldImagery") |>

addRasterImage(rrr8) |>

addCircleMarkers(~x_经度, ~y_纬度,

radius = 5,

label = ~样点类型,

fillColor = ~pal(样点类型),

fillOpacity = 1,

stroke = F)8.4.6 Extracting image values at sampled points

Now we will extract raster values at point locations using the st_extract() function from the {stars} library. It is expected that a value per band is extracted at each point.

We need to convert the raster object into a stars object:

sss <- st_as_stars(rrr)What we got?

sssstars_proxy object with 1 attribute in 1 file(s):

$`UAV multispectral reflectance.tif`

[1] "[...]/UAV multispectral reflectance.tif"

dimension(s):

from to offset delta refsys point x/y

x 1 14420 779258 0.08 WGS 84 / UTM zone 48N FALSE [x]

y 1 7885 2561127 -0.08 WGS 84 / UTM zone 48N FALSE [y]

band 1 5 NA NA NA NA Before conducting the extraction task, it is advisable to collect band values not at a single pixel but at a small window (e.g. 3x3 pixels). Thus, we will start creating 20cm buffers at each site:

poly <- st_buffer(ggg, dist = 0.20)Now, the extraction task:

# Extract the median value per polygon

buf_values <- aggregate(sss, poly, FUN = median) |>

st_as_sf()What we got:

buf_valuesSimple feature collection with 80 features and 5 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 779548.7 ymin: 2560702 xmax: 780097.2 ymax: 2561021

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

UAV multispectral reflectance.tif.V1 UAV multispectral reflectance.tif.V2

1 0.2837147 0.3570867

2 0.3096394 0.4598360

3 0.2652082 0.3556182

4 0.2729177 0.3626878

5 0.3033864 0.3089822

6 0.2993084 0.3655908

7 0.2471483 0.2859972

8 0.2491679 0.3223873

9 0.3213297 0.4069843

10 0.2976966 0.3430840

UAV multispectral reflectance.tif.V3 UAV multispectral reflectance.tif.V4

1 0.2049306 0.6370987

2 0.2058005 0.6609572

3 0.1769855 0.5988070

4 0.1828043 0.7094992

5 0.1894684 0.6805743

6 0.1988409 0.7750074

7 0.1880568 0.5221797

8 0.1855947 0.5934904

9 0.2013687 0.5593004

10 0.2144508 0.8057290

UAV multispectral reflectance.tif.V5 geometry

1 0.4779058 POLYGON ((779838.7 2560800,...

2 0.5714727 POLYGON ((779901.4 2560885,...

3 0.4352523 POLYGON ((779920.3 2560971,...

4 0.5149931 POLYGON ((779837.7 2561010,...

5 0.4008912 POLYGON ((779595.4 2560779,...

6 0.4967270 POLYGON ((779584.8 2560739,...

7 0.3308629 POLYGON ((779569.8 2560857,...

8 0.4570443 POLYGON ((779729.6 2560865,...

9 0.5094757 POLYGON ((779710.7 2560803,...

10 0.5005793 POLYGON ((780079.1 2560879,...Note that names of bands are weird:

names(buf_values)[1] "UAV multispectral reflectance.tif.V1"

[2] "UAV multispectral reflectance.tif.V2"

[3] "UAV multispectral reflectance.tif.V3"

[4] "UAV multispectral reflectance.tif.V4"

[5] "UAV multispectral reflectance.tif.V5"

[6] "geometry" Let’s rename band values:

buf_values |> rename(blue = "UAV multispectral reflectance.tif.V1",

red = "UAV multispectral reflectance.tif.V2",

green = "UAV multispectral reflectance.tif.V3",

redge = "UAV multispectral reflectance.tif.V4",

nir = "UAV multispectral reflectance.tif.V5") -> buf_values2Now, we got shorter names per band:

buf_values2Simple feature collection with 80 features and 5 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 779548.7 ymin: 2560702 xmax: 780097.2 ymax: 2561021

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

blue red green redge nir

1 0.2837147 0.3570867 0.2049306 0.6370987 0.4779058

2 0.3096394 0.4598360 0.2058005 0.6609572 0.5714727

3 0.2652082 0.3556182 0.1769855 0.5988070 0.4352523

4 0.2729177 0.3626878 0.1828043 0.7094992 0.5149931

5 0.3033864 0.3089822 0.1894684 0.6805743 0.4008912

6 0.2993084 0.3655908 0.1988409 0.7750074 0.4967270

7 0.2471483 0.2859972 0.1880568 0.5221797 0.3308629

8 0.2491679 0.3223873 0.1855947 0.5934904 0.4570443

9 0.3213297 0.4069843 0.2013687 0.5593004 0.5094757

10 0.2976966 0.3430840 0.2144508 0.8057290 0.5005793

geometry

1 POLYGON ((779838.7 2560800,...

2 POLYGON ((779901.4 2560885,...

3 POLYGON ((779920.3 2560971,...

4 POLYGON ((779837.7 2561010,...

5 POLYGON ((779595.4 2560779,...

6 POLYGON ((779584.8 2560739,...

7 POLYGON ((779569.8 2560857,...

8 POLYGON ((779729.6 2560865,...

9 POLYGON ((779710.7 2560803,...

10 POLYGON ((780079.1 2560879,...8.4.7 Computing vegetation indices

Ye et al. (2020) used the following indices Figure 8.11:

Thus, we will compute several of those indices:

buf_indices <- buf_values2 |>

mutate(ndvi = (nir - red) / (nir+red),

ndre = (nir - redge) / (nir+redge),

cire = (nir) / (redge-1),

sipi = (nir - blue) / (redge-red)

) |> select(ndvi, ndre, cire, sipi)What we got:

buf_indicesSimple feature collection with 80 features and 4 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 779548.7 ymin: 2560702 xmax: 780097.2 ymax: 2561021

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

ndvi ndre cire sipi geometry

1 0.14469485 -0.14277326 -1.3169030 0.6935100 POLYGON ((779838.7 2560800,...

2 0.10824757 -0.07260820 -1.6855475 1.3018684 POLYGON ((779901.4 2560885,...

3 0.10069173 -0.15816766 -1.0848951 0.6992264 POLYGON ((779920.3 2560971,...

4 0.17353146 -0.15884638 -1.7727770 0.6980030 POLYGON ((779837.7 2561010,...

5 0.12947229 -0.25861496 -1.2550373 0.2623973 POLYGON ((779595.4 2560779,...

6 0.15207400 -0.21881961 -2.2077477 0.4821950 POLYGON ((779584.8 2560739,...

7 0.07273232 -0.22427574 -0.6924421 0.3544487 POLYGON ((779569.8 2560857,...

8 0.17276311 -0.12988254 -1.1243137 0.7667796 POLYGON ((779729.6 2560865,...

9 0.11183404 -0.04661848 -1.1560613 1.2352333 POLYGON ((779710.7 2560803,...

10 0.18668031 -0.23359699 -2.5767059 0.4385279 POLYGON ((780079.1 2560879,...Note that the health status is missing in buf_indices. Therefore, we will need to use a spatial join to link such status:

samples <- st_join(

ggg,

buf_indices,

join = st_intersects)Let’s check the output:

samplesSimple feature collection with 80 features and 8 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 779548.9 ymin: 2560702 xmax: 780097 ymax: 2561020

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

OBJECTID 样点类型 x_经度 y_纬度 ndvi ndre cire

1 1 健康植株 107.7326 23.13240 0.14469485 -0.14277326 -1.3169030

2 2 健康植株 107.7332 23.13316 0.10824757 -0.07260820 -1.6855475

3 3 健康植株 107.7334 23.13394 0.10069173 -0.15816766 -1.0848951

4 4 健康植株 107.7326 23.13430 0.17353146 -0.15884638 -1.7727770

5 5 健康植株 107.7302 23.13225 0.12947229 -0.25861496 -1.2550373

6 6 健康植株 107.7301 23.13190 0.15207400 -0.21881961 -2.2077477

7 7 健康植株 107.7300 23.13297 0.07273232 -0.22427574 -0.6924421

8 8 健康植株 107.7315 23.13301 0.17276311 -0.12988254 -1.1243137

9 9 健康植株 107.7313 23.13245 0.11183404 -0.04661848 -1.1560613

10 10 健康植株 107.7349 23.13307 0.18668031 -0.23359699 -2.5767059

sipi geometry

1 0.6935100 POINT (779838.5 2560800)

2 1.3018684 POINT (779901.2 2560885)

3 0.6992264 POINT (779920.1 2560971)

4 0.6980030 POINT (779837.5 2561010)

5 0.2623973 POINT (779595.2 2560779)

6 0.4821950 POINT (779584.6 2560739)

7 0.3544487 POINT (779569.6 2560857)

8 0.7667796 POINT (779729.4 2560865)

9 1.2352333 POINT (779710.5 2560803)

10 0.4385279 POINT (780078.9 2560879)It seems we succeeded.

unique(samples$样点类型)[1] "健康植株" "枯萎病植株"Let’s check it:

samplesSimple feature collection with 80 features and 8 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 779548.9 ymin: 2560702 xmax: 780097 ymax: 2561020

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

OBJECTID 样点类型 x_经度 y_纬度 ndvi ndre cire

1 1 健康植株 107.7326 23.13240 0.14469485 -0.14277326 -1.3169030

2 2 健康植株 107.7332 23.13316 0.10824757 -0.07260820 -1.6855475

3 3 健康植株 107.7334 23.13394 0.10069173 -0.15816766 -1.0848951

4 4 健康植株 107.7326 23.13430 0.17353146 -0.15884638 -1.7727770

5 5 健康植株 107.7302 23.13225 0.12947229 -0.25861496 -1.2550373

6 6 健康植株 107.7301 23.13190 0.15207400 -0.21881961 -2.2077477

7 7 健康植株 107.7300 23.13297 0.07273232 -0.22427574 -0.6924421

8 8 健康植株 107.7315 23.13301 0.17276311 -0.12988254 -1.1243137

9 9 健康植株 107.7313 23.13245 0.11183404 -0.04661848 -1.1560613

10 10 健康植株 107.7349 23.13307 0.18668031 -0.23359699 -2.5767059

sipi geometry

1 0.6935100 POINT (779838.5 2560800)

2 1.3018684 POINT (779901.2 2560885)

3 0.6992264 POINT (779920.1 2560971)

4 0.6980030 POINT (779837.5 2561010)

5 0.2623973 POINT (779595.2 2560779)

6 0.4821950 POINT (779584.6 2560739)

7 0.3544487 POINT (779569.6 2560857)

8 0.7667796 POINT (779729.4 2560865)

9 1.2352333 POINT (779710.5 2560803)

10 0.4385279 POINT (780078.9 2560879)Now, we will replace the Chinese words for English words:

samples |>

mutate(score = ifelse(样点类型 == '健康植株', 'healthy', 'wilted')) |>

rename(east = x_经度,

north = y_纬度 ) |>

select(OBJECTID,score, ndvi, ndre, cire, sipi) -> nsamplesLet’s check the output:

nsamplesSimple feature collection with 80 features and 6 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 779548.9 ymin: 2560702 xmax: 780097 ymax: 2561020

Projected CRS: WGS 84 / UTM zone 48N

First 10 features:

OBJECTID score ndvi ndre cire sipi

1 1 healthy 0.14469485 -0.14277326 -1.3169030 0.6935100

2 2 healthy 0.10824757 -0.07260820 -1.6855475 1.3018684

3 3 healthy 0.10069173 -0.15816766 -1.0848951 0.6992264

4 4 healthy 0.17353146 -0.15884638 -1.7727770 0.6980030

5 5 healthy 0.12947229 -0.25861496 -1.2550373 0.2623973

6 6 healthy 0.15207400 -0.21881961 -2.2077477 0.4821950

7 7 healthy 0.07273232 -0.22427574 -0.6924421 0.3544487

8 8 healthy 0.17276311 -0.12988254 -1.1243137 0.7667796

9 9 healthy 0.11183404 -0.04661848 -1.1560613 1.2352333

10 10 healthy 0.18668031 -0.23359699 -2.5767059 0.4385279

geometry

1 POINT (779838.5 2560800)

2 POINT (779901.2 2560885)

3 POINT (779920.1 2560971)

4 POINT (779837.5 2561010)

5 POINT (779595.2 2560779)

6 POINT (779584.6 2560739)

7 POINT (779569.6 2560857)

8 POINT (779729.4 2560865)

9 POINT (779710.5 2560803)

10 POINT (780078.9 2560879)As we will not intend to use the geometry in our model, we can remove it:

st_geometry(nsamples) <- NULLLet’s check the output:

nsamples OBJECTID score ndvi ndre cire sipi

1 1 healthy 0.14469485 -0.142773261 -1.3169030 0.6935100

2 2 healthy 0.10824757 -0.072608196 -1.6855475 1.3018684

3 3 healthy 0.10069173 -0.158167658 -1.0848951 0.6992264

4 4 healthy 0.17353146 -0.158846378 -1.7727770 0.6980030

5 5 healthy 0.12947229 -0.258614958 -1.2550373 0.2623973

6 6 healthy 0.15207400 -0.218819608 -2.2077477 0.4821950

7 7 healthy 0.07273232 -0.224275739 -0.6924421 0.3544487

8 8 healthy 0.17276311 -0.129882540 -1.1243137 0.7667796

9 9 healthy 0.11183404 -0.046618476 -1.1560613 1.2352333

10 10 healthy 0.18668031 -0.233596986 -2.5767059 0.4385279

11 11 healthy 0.18657437 -0.228953927 -1.1653227 0.4062720

12 12 healthy 0.08643499 -0.140911613 -0.8354715 0.6312134

13 13 healthy 0.15674017 -0.196911688 -3.7608107 0.5867844

14 14 wilted 0.11821422 -0.068648057 -0.8355714 1.0732255

15 15 wilted 0.09439201 0.038426164 -1.6886927 3.9928701

16 16 wilted 0.11179310 0.099664192 -1.2926303 19.6185081

17 17 wilted 0.09110226 -0.012808011 -0.6207856 1.8658238

18 18 wilted 0.05483186 0.059516988 -1.0249783 -53.7256928

19 19 wilted 0.10296918 0.050902211 -0.5312504 -2.0705492

20 20 wilted 0.09142250 0.166594498 -1.3738936 -4.5687561

21 21 wilted 0.08282444 0.150849653 -1.0515823 -2.7751200

22 22 wilted 0.09622230 -0.026842737 -1.8046361 1.7472896

23 23 wilted 0.10804655 0.128742778 -3.1489130 -15.9373140

24 24 wilted 0.11759462 -0.271995471 -0.7296435 0.3251888

25 25 wilted 0.14612901 -0.170267124 -0.7535340 0.4340166

26 26 wilted 0.14345746 0.007804645 -1.0917760 1.7195275

27 27 wilted 0.09668988 0.048839732 -0.8969843 3.4761651

28 28 wilted 0.16690104 0.009134113 -1.8204254 1.9231470

29 29 wilted 0.12475996 0.018023671 -1.1985424 2.1736919

30 30 wilted 0.12287255 -0.144575443 -0.7030899 0.5607103

31 31 wilted 0.13276824 0.058621948 -1.4800599 3.5889606

32 32 wilted 0.05301838 -0.056220022 -1.6759833 1.7895973

33 33 wilted 0.14595352 -0.119623392 -1.4439231 0.8741458

34 34 wilted 0.11236478 -0.015965114 -1.0488215 1.6813047

35 35 wilted 0.20042051 0.007888690 -1.9802223 1.6851630

36 36 wilted 0.14792880 -0.186300667 -0.7084196 0.3644668

37 37 wilted 0.11381952 0.049444260 -1.1052035 3.7268769

38 38 wilted 0.12729611 0.049169099 -0.6510980 1.2817601

39 39 wilted 0.16311398 -0.075256088 -1.1007427 0.4779209

40 40 wilted 0.12317018 -0.043296886 -0.7492018 0.9707563

41 41 wilted 0.11177875 0.012897741 -1.8978005 2.8596823

42 42 wilted 0.09087224 0.100019670 -1.0620017 -29.9002728

43 43 wilted 0.15791341 -0.005372302 -1.5073174 1.7255063

44 44 wilted 0.13393783 -0.023050553 -0.8915742 1.0855461

45 45 wilted 0.06276064 -0.058164786 -1.0170161 1.4100601

46 46 wilted 0.14991261 -0.080920969 -1.5173127 1.1947273

47 47 wilted 0.15454129 -0.029567154 -1.4345890 1.8891207

48 48 wilted 0.17448058 0.053204886 -0.9757240 2.8155912

49 49 healthy 0.10767838 -0.279952871 -1.2310963 0.2743482

50 50 wilted 0.11253491 -0.001355745 -1.0156727 2.0230335

51 51 healthy 0.17156708 -0.130486894 -0.9670619 0.5846040

52 52 healthy 0.11201780 -0.240080432 -1.5616428 0.3767992

53 53 healthy 0.11357791 -0.233516246 -0.7266852 0.5174349

54 54 healthy 0.09640389 -0.197173474 -1.6740870 0.6429254

55 55 healthy 0.10371600 -0.148771702 -1.7079973 0.6404103

56 56 healthy 0.13733091 -0.192621610 -1.0920985 0.5104957

57 57 healthy 0.10494961 -0.141993366 -0.9767054 0.7876503

58 58 healthy 0.09594242 -0.238072742 -1.2599388 0.4055777

59 59 healthy 0.12253980 -0.228534207 -0.6240105 0.3125564

60 60 healthy 0.11191907 -0.200302031 -1.6484365 0.4100274

61 61 healthy 0.17497565 -0.348438178 -0.8327460 0.2617154

62 62 healthy 0.06286945 -0.066868925 -1.9457173 0.9082508

63 63 healthy 0.15875140 -0.180227754 -1.1609626 0.5244801

64 64 healthy 0.12946220 -0.116778741 -1.1471938 0.6492517

65 65 healthy 0.13029153 -0.251235648 -1.1507658 0.3096142

66 66 healthy 0.18309513 -0.237002851 -2.1102911 0.4401729

67 67 healthy 0.18231241 -0.191220292 -1.5998799 0.5043085

68 68 healthy 0.12550657 -0.238273304 -0.8386376 0.3827526

69 69 healthy 0.15353233 -0.215340798 -2.9878736 0.5652307

70 70 healthy 0.17299827 -0.279709014 -1.4354651 0.3986933

71 71 healthy 0.10020553 -0.181384162 -1.4309319 0.4048364

72 72 healthy 0.16533571 -0.222465226 -1.4819059 0.4107726

73 73 healthy 0.16081598 0.009196845 -1.1312141 2.2696678

74 74 healthy 0.10260883 -0.288149676 -0.8375206 0.2317196

75 75 healthy 0.19372839 -0.149982883 -2.4120161 0.7065984

76 76 healthy 0.17650050 -0.249396644 -1.5425889 0.4227645

77 77 wilted 0.13466946 -0.014630713 -1.1275266 1.7332531

78 78 wilted 0.14542428 0.035346511 -1.3640391 2.9550260

79 79 wilted 0.16581448 0.097763989 -1.0057000 4.8381045

80 80 wilted 0.07985435 0.045756804 -1.3962020 7.5734216As our task is a binary classification (i.e any site can be either healthy or wilted), the variable to estimate is a factor (not a character).

Let’s change the data type of such variable:

nsamples$score = as.factor(nsamples$score) Let’s check the result:

nsamples OBJECTID score ndvi ndre cire sipi

1 1 healthy 0.14469485 -0.142773261 -1.3169030 0.6935100

2 2 healthy 0.10824757 -0.072608196 -1.6855475 1.3018684

3 3 healthy 0.10069173 -0.158167658 -1.0848951 0.6992264

4 4 healthy 0.17353146 -0.158846378 -1.7727770 0.6980030

5 5 healthy 0.12947229 -0.258614958 -1.2550373 0.2623973

6 6 healthy 0.15207400 -0.218819608 -2.2077477 0.4821950

7 7 healthy 0.07273232 -0.224275739 -0.6924421 0.3544487

8 8 healthy 0.17276311 -0.129882540 -1.1243137 0.7667796

9 9 healthy 0.11183404 -0.046618476 -1.1560613 1.2352333

10 10 healthy 0.18668031 -0.233596986 -2.5767059 0.4385279

11 11 healthy 0.18657437 -0.228953927 -1.1653227 0.4062720

12 12 healthy 0.08643499 -0.140911613 -0.8354715 0.6312134

13 13 healthy 0.15674017 -0.196911688 -3.7608107 0.5867844

14 14 wilted 0.11821422 -0.068648057 -0.8355714 1.0732255

15 15 wilted 0.09439201 0.038426164 -1.6886927 3.9928701

16 16 wilted 0.11179310 0.099664192 -1.2926303 19.6185081

17 17 wilted 0.09110226 -0.012808011 -0.6207856 1.8658238

18 18 wilted 0.05483186 0.059516988 -1.0249783 -53.7256928

19 19 wilted 0.10296918 0.050902211 -0.5312504 -2.0705492

20 20 wilted 0.09142250 0.166594498 -1.3738936 -4.5687561

21 21 wilted 0.08282444 0.150849653 -1.0515823 -2.7751200

22 22 wilted 0.09622230 -0.026842737 -1.8046361 1.7472896

23 23 wilted 0.10804655 0.128742778 -3.1489130 -15.9373140

24 24 wilted 0.11759462 -0.271995471 -0.7296435 0.3251888

25 25 wilted 0.14612901 -0.170267124 -0.7535340 0.4340166

26 26 wilted 0.14345746 0.007804645 -1.0917760 1.7195275

27 27 wilted 0.09668988 0.048839732 -0.8969843 3.4761651

28 28 wilted 0.16690104 0.009134113 -1.8204254 1.9231470

29 29 wilted 0.12475996 0.018023671 -1.1985424 2.1736919

30 30 wilted 0.12287255 -0.144575443 -0.7030899 0.5607103

31 31 wilted 0.13276824 0.058621948 -1.4800599 3.5889606

32 32 wilted 0.05301838 -0.056220022 -1.6759833 1.7895973

33 33 wilted 0.14595352 -0.119623392 -1.4439231 0.8741458

34 34 wilted 0.11236478 -0.015965114 -1.0488215 1.6813047

35 35 wilted 0.20042051 0.007888690 -1.9802223 1.6851630

36 36 wilted 0.14792880 -0.186300667 -0.7084196 0.3644668

37 37 wilted 0.11381952 0.049444260 -1.1052035 3.7268769

38 38 wilted 0.12729611 0.049169099 -0.6510980 1.2817601

39 39 wilted 0.16311398 -0.075256088 -1.1007427 0.4779209

40 40 wilted 0.12317018 -0.043296886 -0.7492018 0.9707563

41 41 wilted 0.11177875 0.012897741 -1.8978005 2.8596823

42 42 wilted 0.09087224 0.100019670 -1.0620017 -29.9002728

43 43 wilted 0.15791341 -0.005372302 -1.5073174 1.7255063

44 44 wilted 0.13393783 -0.023050553 -0.8915742 1.0855461

45 45 wilted 0.06276064 -0.058164786 -1.0170161 1.4100601

46 46 wilted 0.14991261 -0.080920969 -1.5173127 1.1947273

47 47 wilted 0.15454129 -0.029567154 -1.4345890 1.8891207

48 48 wilted 0.17448058 0.053204886 -0.9757240 2.8155912

49 49 healthy 0.10767838 -0.279952871 -1.2310963 0.2743482

50 50 wilted 0.11253491 -0.001355745 -1.0156727 2.0230335

51 51 healthy 0.17156708 -0.130486894 -0.9670619 0.5846040

52 52 healthy 0.11201780 -0.240080432 -1.5616428 0.3767992

53 53 healthy 0.11357791 -0.233516246 -0.7266852 0.5174349

54 54 healthy 0.09640389 -0.197173474 -1.6740870 0.6429254

55 55 healthy 0.10371600 -0.148771702 -1.7079973 0.6404103

56 56 healthy 0.13733091 -0.192621610 -1.0920985 0.5104957

57 57 healthy 0.10494961 -0.141993366 -0.9767054 0.7876503

58 58 healthy 0.09594242 -0.238072742 -1.2599388 0.4055777

59 59 healthy 0.12253980 -0.228534207 -0.6240105 0.3125564

60 60 healthy 0.11191907 -0.200302031 -1.6484365 0.4100274

61 61 healthy 0.17497565 -0.348438178 -0.8327460 0.2617154

62 62 healthy 0.06286945 -0.066868925 -1.9457173 0.9082508

63 63 healthy 0.15875140 -0.180227754 -1.1609626 0.5244801

64 64 healthy 0.12946220 -0.116778741 -1.1471938 0.6492517

65 65 healthy 0.13029153 -0.251235648 -1.1507658 0.3096142

66 66 healthy 0.18309513 -0.237002851 -2.1102911 0.4401729

67 67 healthy 0.18231241 -0.191220292 -1.5998799 0.5043085

68 68 healthy 0.12550657 -0.238273304 -0.8386376 0.3827526

69 69 healthy 0.15353233 -0.215340798 -2.9878736 0.5652307

70 70 healthy 0.17299827 -0.279709014 -1.4354651 0.3986933

71 71 healthy 0.10020553 -0.181384162 -1.4309319 0.4048364

72 72 healthy 0.16533571 -0.222465226 -1.4819059 0.4107726

73 73 healthy 0.16081598 0.009196845 -1.1312141 2.2696678

74 74 healthy 0.10260883 -0.288149676 -0.8375206 0.2317196

75 75 healthy 0.19372839 -0.149982883 -2.4120161 0.7065984

76 76 healthy 0.17650050 -0.249396644 -1.5425889 0.4227645

77 77 wilted 0.13466946 -0.014630713 -1.1275266 1.7332531

78 78 wilted 0.14542428 0.035346511 -1.3640391 2.9550260

79 79 wilted 0.16581448 0.097763989 -1.0057000 4.8381045

80 80 wilted 0.07985435 0.045756804 -1.3962020 7.5734216A simple summary of the extracted data can be useful:

nsamples |>

group_by(score) |>

summarize(n())# A tibble: 2 × 2

score `n()`

<fct> <int>

1 healthy 40

2 wilted 40This mean the dataset is balanced which is very good.

8.4.8 Saving the extracted dataset

Now, let’s save the nsamples object. Just in case R crashes due to lack of memory.

#uncomment if needed

#st_write(nsamples, "./banana_data/nsamples.csv", overwrite=TRUE)8.4.9 Classification of Fusarium wilt using machine learning (ML)

The overall process to classify the crop disease under study will be conducted using the tidymodels framework which is an extension of the tidyverse suite. It is especially focused towards providing a generalized way to define, run and optimize ML models in R.

8.4.9.1 Exploratory analysis

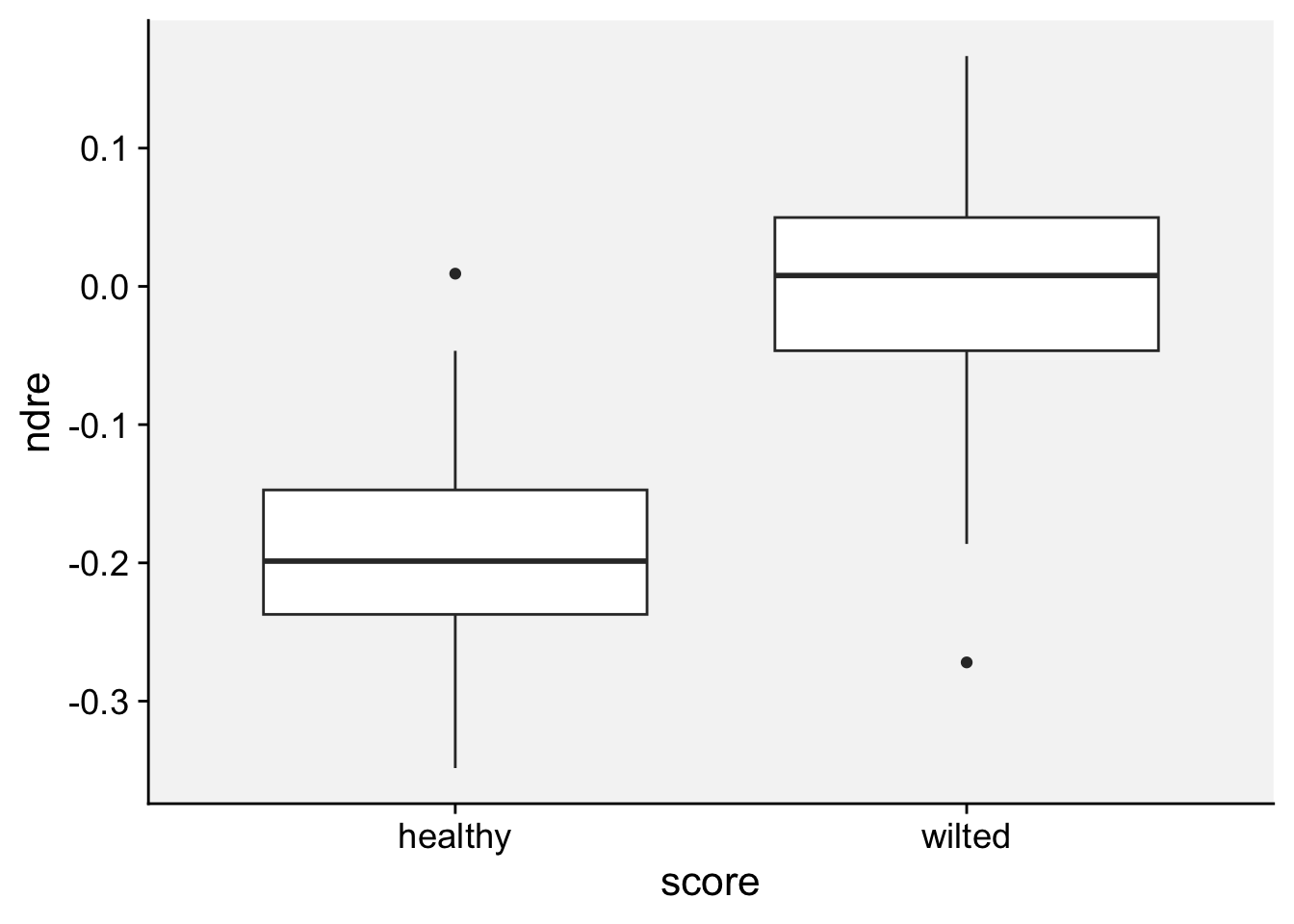

As a first step in modeling, it’s always a good idea to visualize the data. Let’s start with a boxplot to displays the distribution of a vegetation index. It visualizes five summary statistics (the median, two hinges and two whiskers), and all “outlying” points individually.

p <- ggplot(nsamples, aes(score, ndre))+

r4pde::theme_r4pde()Warning: replacing previous import 'car::recode' by 'dplyr::recode' when

loading 'r4pde'p + geom_boxplot()

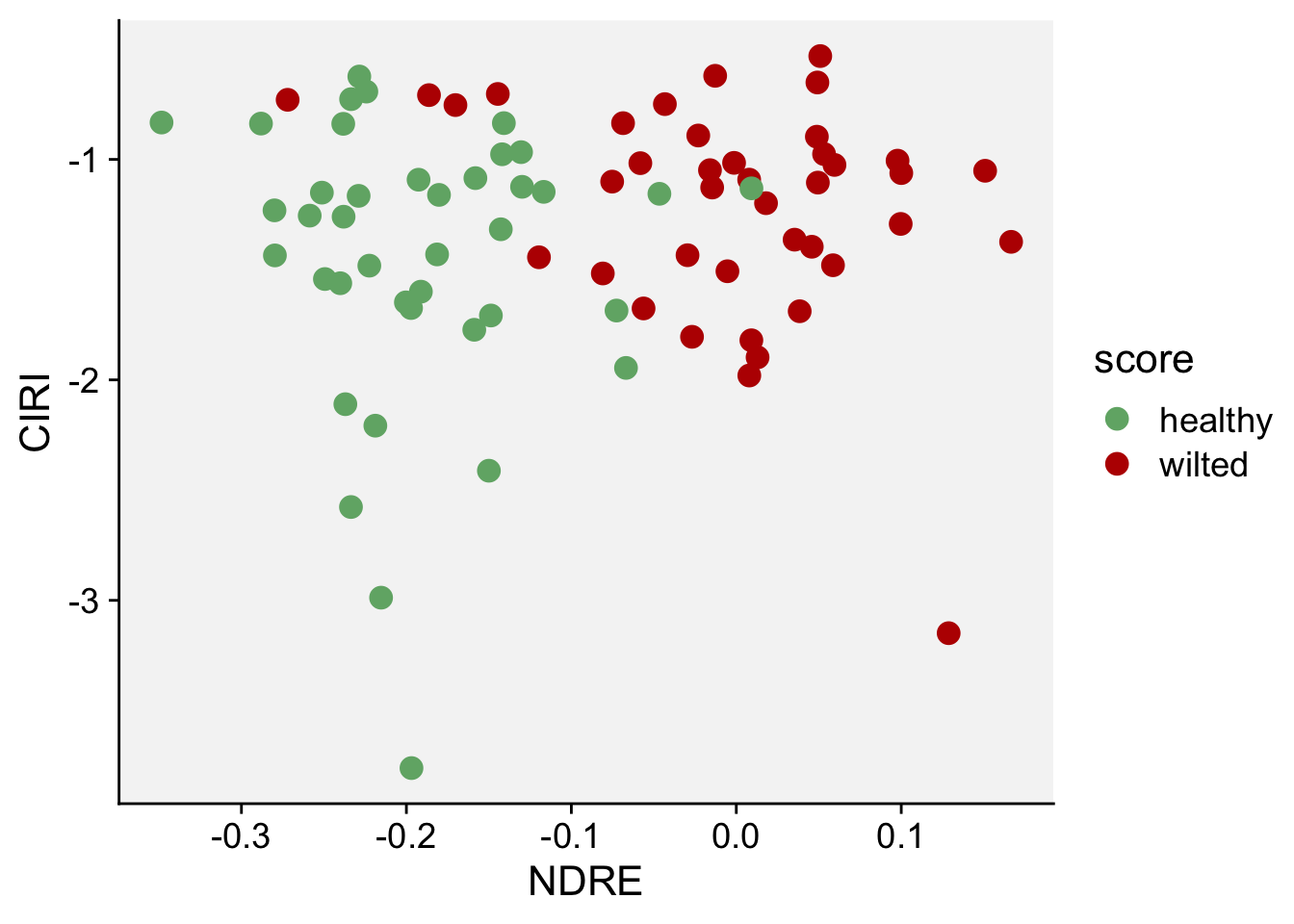

Next, we will do a scatterplot to visualize the indices NDRE and CIRE:

ggplot(nsamples) +

aes(x = ndre, y = cire, color = score) +

geom_point(shape = 16, size = 4) +

labs(x = "NDRE", y = "CIRI") +

r4pde::theme_r4pde() +

scale_color_manual(values = c("#71b075", "#ba0600"))

8.4.9.2 Splitting the data

Next step is to divide the data into a training and a test set. The set.seed() function can be used for reproducibility of the computations that are dependent on random numbers. By default, the training/testing split is 0.75 to 0.25.

set.seed(42)

data_split <- initial_split(data = nsamples)

data_train <- training(data_split)

data_test <- testing(data_split)Let’s check the result:

data_train OBJECTID score ndvi ndre cire sipi

1 49 healthy 0.10767838 -0.279952871 -1.2310963 0.2743482

2 65 healthy 0.13029153 -0.251235648 -1.1507658 0.3096142

3 25 wilted 0.14612901 -0.170267124 -0.7535340 0.4340166

4 74 healthy 0.10260883 -0.288149676 -0.8375206 0.2317196

5 18 wilted 0.05483186 0.059516988 -1.0249783 -53.7256928

6 80 wilted 0.07985435 0.045756804 -1.3962020 7.5734216

7 47 wilted 0.15454129 -0.029567154 -1.4345890 1.8891207

8 24 wilted 0.11759462 -0.271995471 -0.7296435 0.3251888

9 71 healthy 0.10020553 -0.181384162 -1.4309319 0.4048364

10 37 wilted 0.11381952 0.049444260 -1.1052035 3.7268769

11 20 wilted 0.09142250 0.166594498 -1.3738936 -4.5687561

12 26 wilted 0.14345746 0.007804645 -1.0917760 1.7195275

13 3 healthy 0.10069173 -0.158167658 -1.0848951 0.6992264

14 41 wilted 0.11177875 0.012897741 -1.8978005 2.8596823

15 27 wilted 0.09668988 0.048839732 -0.8969843 3.4761651

16 36 wilted 0.14792880 -0.186300667 -0.7084196 0.3644668

17 72 healthy 0.16533571 -0.222465226 -1.4819059 0.4107726

18 31 wilted 0.13276824 0.058621948 -1.4800599 3.5889606

19 45 wilted 0.06276064 -0.058164786 -1.0170161 1.4100601

20 5 healthy 0.12947229 -0.258614958 -1.2550373 0.2623973

21 70 healthy 0.17299827 -0.279709014 -1.4354651 0.3986933

22 34 wilted 0.11236478 -0.015965114 -1.0488215 1.6813047

23 28 wilted 0.16690104 0.009134113 -1.8204254 1.9231470

24 40 wilted 0.12317018 -0.043296886 -0.7492018 0.9707563

25 68 healthy 0.12550657 -0.238273304 -0.8386376 0.3827526

26 33 wilted 0.14595352 -0.119623392 -1.4439231 0.8741458

27 42 wilted 0.09087224 0.100019670 -1.0620017 -29.9002728

28 73 healthy 0.16081598 0.009196845 -1.1312141 2.2696678

29 30 wilted 0.12287255 -0.144575443 -0.7030899 0.5607103

30 43 wilted 0.15791341 -0.005372302 -1.5073174 1.7255063

31 15 wilted 0.09439201 0.038426164 -1.6886927 3.9928701

32 22 wilted 0.09622230 -0.026842737 -1.8046361 1.7472896

33 8 healthy 0.17276311 -0.129882540 -1.1243137 0.7667796

34 79 wilted 0.16581448 0.097763989 -1.0057000 4.8381045

35 4 healthy 0.17353146 -0.158846378 -1.7727770 0.6980030

36 75 healthy 0.19372839 -0.149982883 -2.4120161 0.7065984

37 76 healthy 0.17650050 -0.249396644 -1.5425889 0.4227645

38 58 healthy 0.09594242 -0.238072742 -1.2599388 0.4055777

39 61 healthy 0.17497565 -0.348438178 -0.8327460 0.2617154

40 46 wilted 0.14991261 -0.080920969 -1.5173127 1.1947273

41 59 healthy 0.12253980 -0.228534207 -0.6240105 0.3125564

42 35 wilted 0.20042051 0.007888690 -1.9802223 1.6851630

43 53 healthy 0.11357791 -0.233516246 -0.7266852 0.5174349

44 23 wilted 0.10804655 0.128742778 -3.1489130 -15.9373140

45 69 healthy 0.15353233 -0.215340798 -2.9878736 0.5652307

46 6 healthy 0.15207400 -0.218819608 -2.2077477 0.4821950

47 39 wilted 0.16311398 -0.075256088 -1.1007427 0.4779209

48 2 healthy 0.10824757 -0.072608196 -1.6855475 1.3018684

49 60 healthy 0.11191907 -0.200302031 -1.6484365 0.4100274

50 56 healthy 0.13733091 -0.192621610 -1.0920985 0.5104957

51 62 healthy 0.06286945 -0.066868925 -1.9457173 0.9082508

52 21 wilted 0.08282444 0.150849653 -1.0515823 -2.7751200

53 55 healthy 0.10371600 -0.148771702 -1.7079973 0.6404103

54 64 healthy 0.12946220 -0.116778741 -1.1471938 0.6492517

55 57 healthy 0.10494961 -0.141993366 -0.9767054 0.7876503

56 10 healthy 0.18668031 -0.233596986 -2.5767059 0.4385279

57 48 wilted 0.17448058 0.053204886 -0.9757240 2.8155912

58 54 healthy 0.09640389 -0.197173474 -1.6740870 0.6429254

59 1 healthy 0.14469485 -0.142773261 -1.3169030 0.6935100

60 17 wilted 0.09110226 -0.012808011 -0.6207856 1.86582388.4.9.3 Defining the model

We will use a logistic regression which is a simple model. It may be useful to have a look at this explanation of such a model.

spec_lr <-

logistic_reg() |>

set_engine("glm") |>

set_mode("classification")8.4.9.4 Defining the recipe

The recipe() function to be used here has two arguments:

A formula. Any variable on the left-hand side of the tilde (~) is considered the model outcome (here, outcome). On the right-hand side of the tilde are the predictors. Variables may be listed by name, or you can use the dot (.) to indicate all other variables as predictors.

The data. A recipe is associated with the data set used to create the model. This will typically be the training set, so

data = data_trainhere.

recipe_lr <-

recipe(score ~ ., data_train) |>

add_role(OBJECTID, new_role = "id") |>

step_zv(all_predictors()) |>

step_corr(all_predictors())8.4.9.5 Evaluating model performance

Next, we need to specify what we would like to see for determining the performance of the model. Different modelling algorithms have different types of metrics. Because we have a binary classification problem (healthy vs. wilted classification), we will chose the AUC - ROC evaluation metric here.

8.4.9.6 Combining model and recipe into a workflow

We will want to use our recipe across several steps as we train and test our model. We will:

Process the recipe using the training set: This involves any estimation or calculations based on the training set. For our recipe, the training set will be used to determine which predictors should be converted to dummy variables and which predictors will have zero-variance in the training set, and should be slated for removal.

Apply the recipe to the training set: We create the final predictor set on the training set.

Apply the recipe to the test set: We create the final predictor set on the test set. Nothing is recomputed and no information from the test set is used here; the dummy variable and zero-variance results from the training set are applied to the test set.

To simplify this process, we can use a model workflow, which pairs a model and recipe together. This is a straightforward approach because different recipes are often needed for different models, so when a model and recipe are bundled, it becomes easier to train and test workflows. We’ll use the workflows package from tidymodels to bundle our model with our recipe.

Now we are ready to setup our complete modelling workflow. This workflow contains the model specification and the recipe.

wf_bana_wilt <-

workflow(

spec = spec_lr,

recipe_lr

)

wf_bana_wilt══ Workflow ════════════════════════════════════════════════════════════════════

Preprocessor: Recipe

Model: logistic_reg()

── Preprocessor ────────────────────────────────────────────────────────────────

2 Recipe Steps

• step_zv()

• step_corr()

── Model ───────────────────────────────────────────────────────────────────────

Logistic Regression Model Specification (classification)

Computational engine: glm 8.4.9.7 Fitting the logistic regression model

Now we use the workflow previously created to fit the model on our training data. We use the training partition of the data.

fit_lr <- wf_bana_wilt |>

fit(data = data_train)Let’s check the output:

fit_lr══ Workflow [trained] ══════════════════════════════════════════════════════════

Preprocessor: Recipe

Model: logistic_reg()

── Preprocessor ────────────────────────────────────────────────────────────────

2 Recipe Steps

• step_zv()

• step_corr()

── Model ───────────────────────────────────────────────────────────────────────

Call: stats::glm(formula = ..y ~ ., family = stats::binomial, data = data)

Coefficients:

(Intercept) OBJECTID ndvi ndre cire sipi

7.64191 -0.02527 4.33597 27.50498 3.19965 -0.11597

Degrees of Freedom: 59 Total (i.e. Null); 54 Residual

Null Deviance: 83.18

Residual Deviance: 32.8 AIC: 44.8Now, we will use the fitted model to estimate health status in the training data:

rf_training_pred <-

predict(fit_lr, data_train) |>

bind_cols(predict(fit_lr, data_train, type = "prob")) |>

# Add the true outcome data back in

bind_cols(data_train |>

select(score))What we got?

rf_training_pred# A tibble: 60 × 4

.pred_class .pred_healthy .pred_wilted score

<fct> <dbl> <dbl> <fct>

1 healthy 0.992 0.00816 healthy

2 healthy 0.983 0.0169 healthy

3 wilted 0.378 0.622 wilted

4 healthy 0.988 0.0119 healthy

5 wilted 0.00000607 1.00 wilted

6 wilted 0.132 0.868 wilted

7 wilted 0.182 0.818 wilted

8 healthy 0.910 0.0905 wilted

9 healthy 0.966 0.0345 healthy

10 wilted 0.0100 0.990 wilted

# ℹ 50 more rowsLet’s estimate the training accuracy:

rf_training_pred |> # training set predictions

accuracy(truth = score, .pred_class) -> acc_train

acc_train# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.85The accuracy of the model on the training data is 0.85 which is above 0.5 (mere chance). This basically means that the model was able to learn predictive patterns from the training data. To see if the model is able to generalize what it learned when exposed to new data, we evaluate the model on our hold-out (or so-called test data). We created a test dataset when splitting the data at the start of the modelling.

8.4.9.8 Evaluating the model on test data

Now, we will use the fitted model to estimate health status in the testing data:

lr_testing_pred <-

predict(fit_lr, data_test) |>

bind_cols(predict(fit_lr, data_test, type = "prob")) |>

bind_cols(data_test |> select(score))What we got:

lr_testing_pred# A tibble: 20 × 4

.pred_class .pred_healthy .pred_wilted score

<fct> <dbl> <dbl> <fct>

1 healthy 0.656 0.344 healthy

2 wilted 0.0587 0.941 healthy

3 healthy 0.870 0.130 healthy

4 wilted 0.251 0.749 healthy

5 healthy 1.00 0.0000730 healthy

6 wilted 0.0425 0.957 wilted

7 wilted 0.0171 0.983 wilted

8 wilted 0.000527 0.999 wilted

9 wilted 0.0207 0.979 wilted

10 healthy 0.513 0.487 wilted

11 wilted 0.00174 0.998 wilted

12 wilted 0.0294 0.971 wilted

13 wilted 0.0341 0.966 wilted

14 wilted 0.414 0.586 healthy

15 healthy 0.992 0.00792 healthy

16 healthy 0.880 0.120 healthy

17 healthy 0.999 0.00142 healthy

18 healthy 0.976 0.0242 healthy

19 wilted 0.112 0.888 wilted

20 wilted 0.0713 0.929 wilted Let’s compute the testing accuracy:

lr_testing_pred |> # test set predictions

accuracy(score, .pred_class)# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.8The resulting accuracy is similar to the accuracy on the training data. It is good for a first go and a relatively simple classification model.

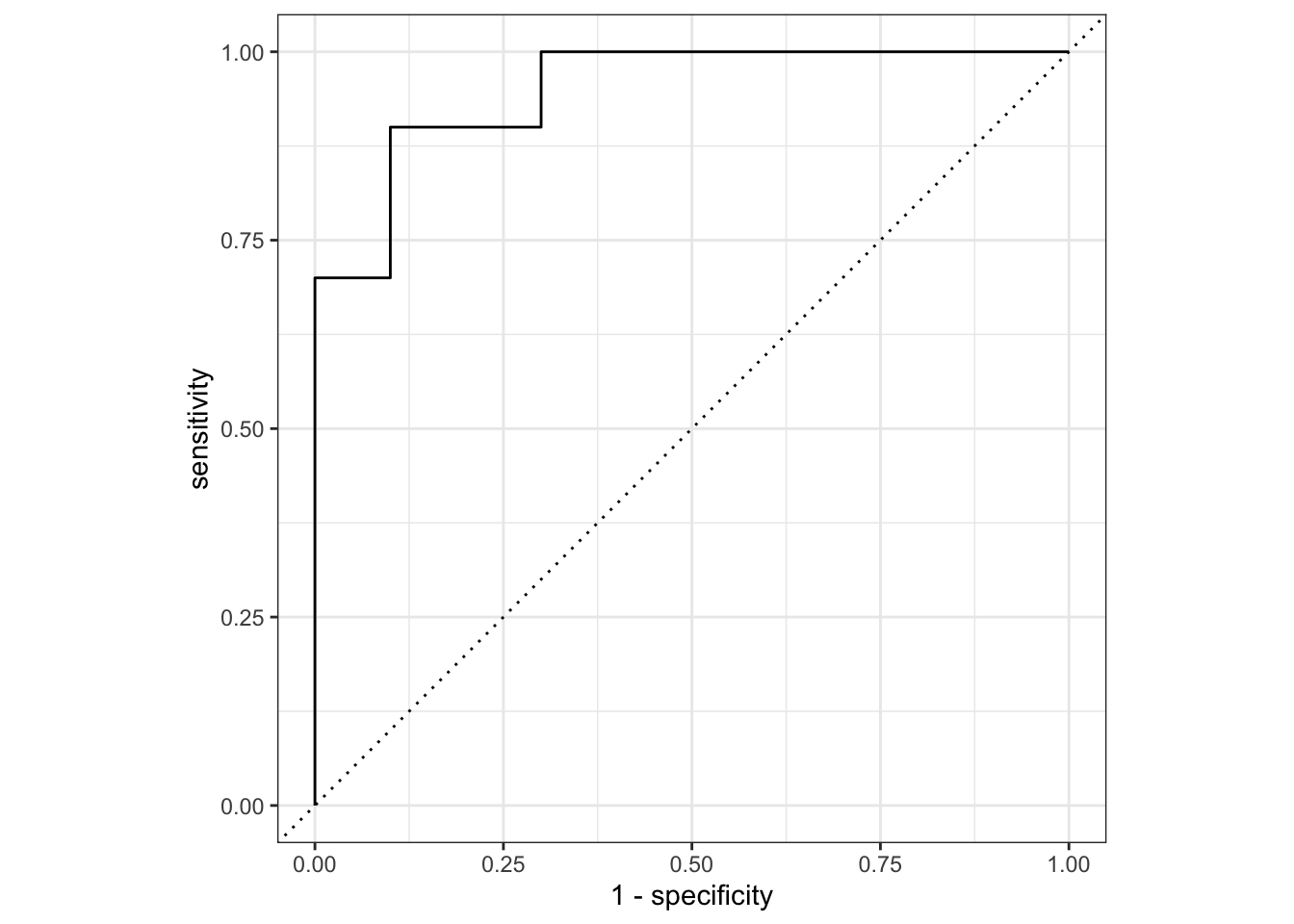

## Let's plot the AUC-ROC

lr_testing_pred |>

roc_curve(truth = score, .pred_wilted, event_level="second") |>

mutate(model = "Logistic Regression") |>

autoplot()

8.4.10 Conclusions

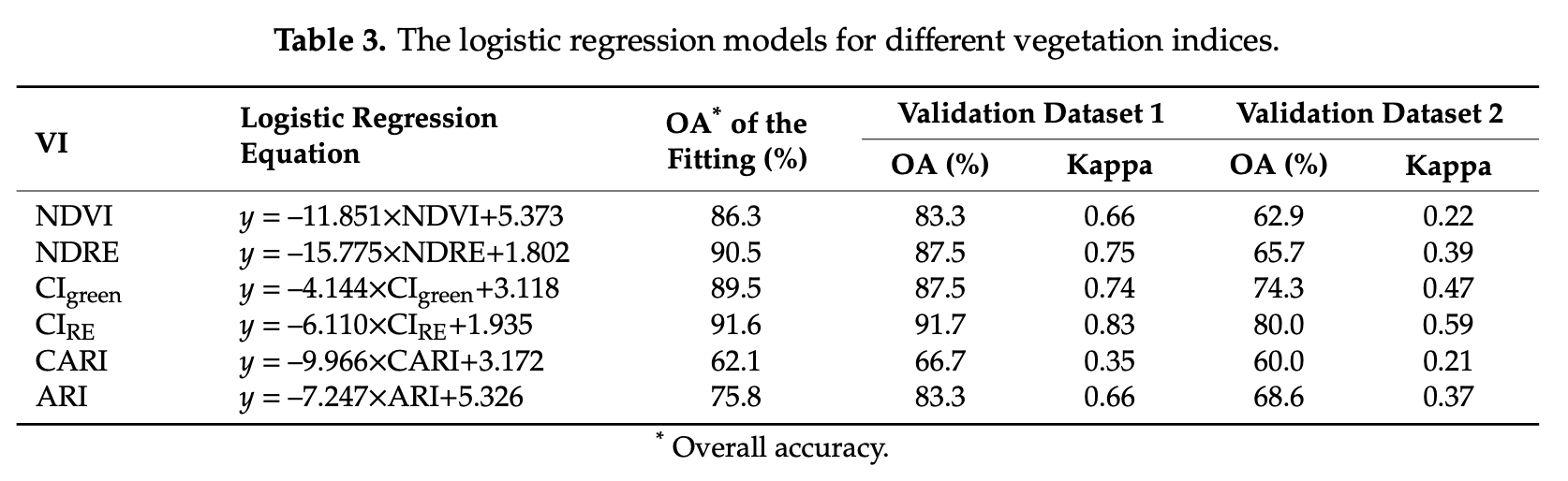

In this section, we trained and tested a logistic regression model (LGM) using four spectral indices as predictor variables (i.e. NDVI, NDRE, CIRE and SIPI). Compare this section results, in terms of equation and accuracy, with the individual LGMs tested by [Ye et al. (2020)]Figure 8.12.

Note that we have not tested other ML algorithms. But there are a lot of them available from the tidymodels framework (e.g. random forests, support vector machines, gradient boosting machines).

To conclude, this section illustrated how to use VIs derived from UAV-based multispectral imagery and ground data to develop an identification model for detecting banana Fusarium wilt. The results showed that a simple logistic regression model is able to identify Fusarium wilt of banana from several VIs with a good accuracy. However, before going too optimistic, I would suggest to study the Ye et al. (2020) paper and critically evaluate their experiment design, methods and results.

8.5 Disease quantification

8.5.1 Introduction

This section illustrates the use of UAV multispecral imagery for estimating the severity of cercospora leaf spot (CLS) disease in table beet, the most destructive fungal disease of table beet (Skaracis et al. 2010; Tan et al. 2023). The CLS disease causes rapid defoliation and significant crop loss may occur through the inability to harvest with top-pulling machinery. It may also render the produce unsaleable for the fresh market (Skaracis et al. 2010).

Saif et al. (2024) recently published on Mendeley data UAV imagery & ground truth CLS data collected a several table beef plots at Cornell AgriTech, Geneva, New York, USA. Note that, in this study, UAV multispectral images were collected using a Micasense Red Edge camera, similar to the one used in the case study for the detection of Fusarium wilt on banana. I have not found any scientific paper estimating CLS severity from this dataset. However, in Saif et al. (2023), a similar dataset was used to forecast table beet root yield at Cornell Agritech. While it is just a guess, it is worth visualizing the plots in the latter study.

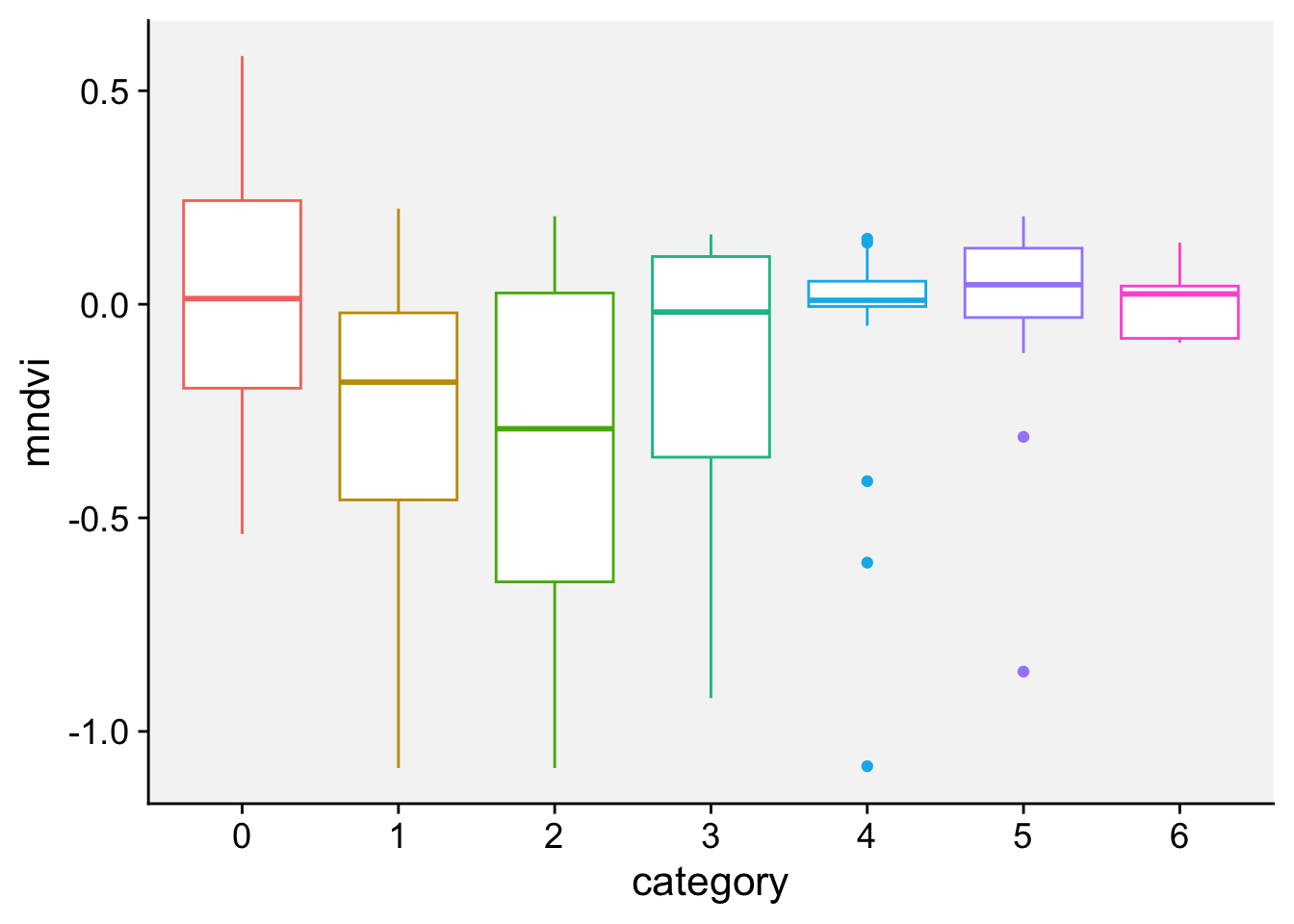

This section aims at estimating CLS leaf severity using vegetation indices (VIs) derived from the multispectral UAV imagery as spectral covariates. The section comprises two parts: (i) Part 1 creates a spectral covariate table to summarize the complete UAV imagery & ground truth data; and (ii) Part 2 trains and tests a machine learning model to estimate CLS severity.

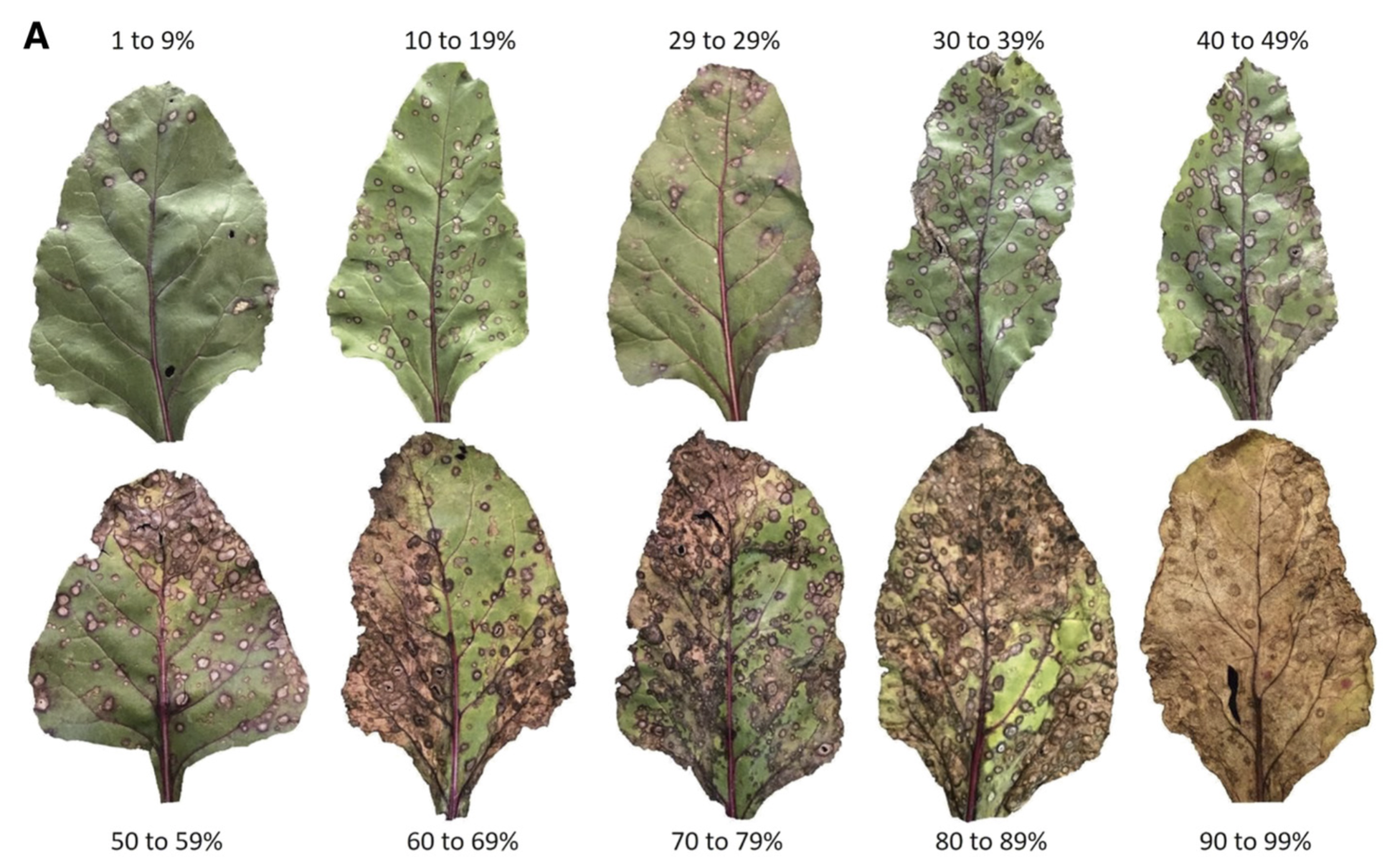

8.5.2 CLS severity on leaves

It is very important to visualize how different levels of table beet CLS disease severity look in RGB color leaf images. The figure below is a standard area diagram set (SADs) that is used by raters during the assessment of visual severity to increase their accuracy and reliability of the estimates.

8.5.3 Software setup

Let’s start by cleaning up R memory:

rm(list=ls())Then, we need to install several packages (if they are not installed yet):

list.of.packages <- c("readr","terra", "tidyterra", "stars", "sf", "leaflet", "leafem", "dplyr", "ggplot2", "tidymodels")

new.packages <- list.of.packages[!(list.of.packages %in% installed.packages()[,"Package"])]

if(length(new.packages)) install.packages(new.packages)Now, let’s load all the required packages:

library(readr)

library(terra)

library(tidyterra)

library(stars)

library(sf)

library(leaflet)

library(leafem)

library(dplyr)

library(ggplot2)

library(tidymodels)8.5.4 Read the dataset

The following code assumes you have already downloaded the dataset and unzipped its content under the data/cercospora_data directory. What files are in that folder?

list.files("data/cercospora_data") [1] "CLS_DS.csv" "D0_2021.csv" "D1_2021.csv"

[4] "D2_2021.csv" "D3_2021.csv" "D4_2021.csv"

[7] "fcovar_2021.csv" "multispec_2021_2022" "multispec_2023"

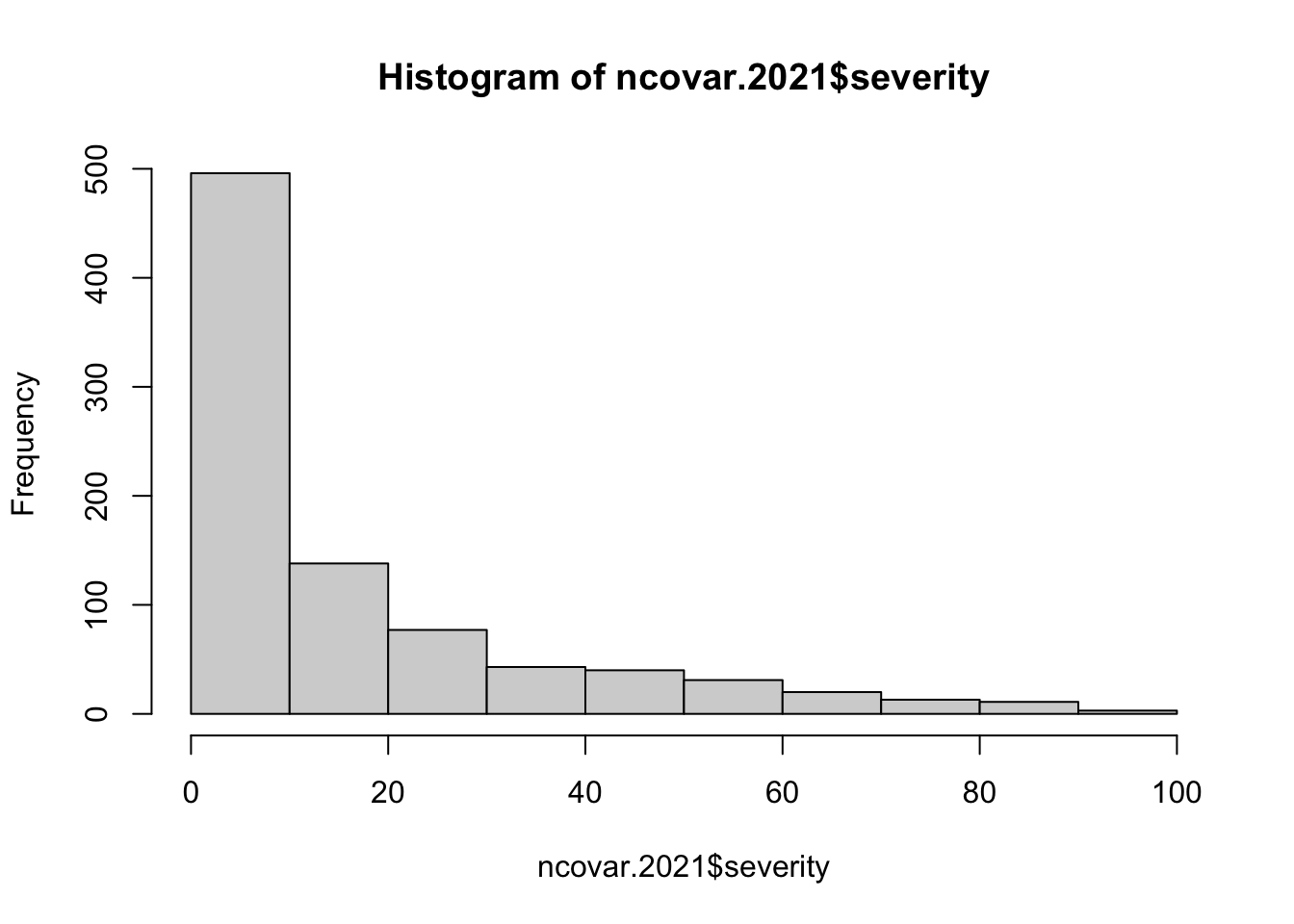

[10] "ncovar_2021.csv" "README.md" Note that there is one CLS_DS.csv file with the CLS ground data and two folders with the UAV multispectral images. We can infer that 2021, 2022, 2023 refer to the image acquisition years. At this point, it is very important to check the README file. I will summarize the following points:

8.5.4.1 File naming convention

Each image file is named according to the plot number and the date of capture, using the format plt_rYYYYMMDD, where:

- ‘plt’ stands for the plot number.

- ‘YYYYMMDD’ represents the date on which the image was captured (year, month, day).

For example, the file name 5_r20210715 corresponds to an image taken on July 15, 2021, from study plot 5.

8.5.4.2 CLS Severity Data

File named CLS_DS contain visual assessments of CLS disease severity noted for each plot.

8.5.5 Inspect the format of each file

Let’s list the first images under an image folder:

list.files("data/cercospora_data/multispec_2021_2022/")[1:15] [1] "1_r20210707.tif" "1_r20210707.tif.aux.xml"

[3] "1_r20210715.tif" "1_r20210720.tif"

[5] "1_r20210802.tif" "1_r20210825.tif"

[7] "1_r20210825.tif.aux.xml" "1_r20220707.tif"

[9] "1_r20220715.tif" "1_r20220726.tif"

[11] "1_r20220810.tif" "1_r20220818.tif"

[13] "10_r20210707.tif" "10_r20210715.tif"

[15] "10_r20210720.tif" Note that, for 2021, there are six dates of image acquisition: 20210707, 20210715, 20210720,20210726, 20210802,20210825

8.5.6 Read the orthomosaics

Now, let’s read several plot images using the terra package:

# Open a tif collected on study plot 5 on 7th July 2021

tif1 <- "data/cercospora_data/multispec_2021_2022/1_r20210707.tif"

tif2 <- "data/cercospora_data/multispec_2021_2022/2_r20210707.tif"

tif3 <- "data/cercospora_data/multispec_2021_2022/3_r20210707.tif"

tif4 <- "data/cercospora_data/multispec_2021_2022/4_r20210707.tif"

tif5 <- "data/cercospora_data/multispec_2021_2022/5_r20210707.tif"

tif6 <- "data/cercospora_data/multispec_2021_2022/6_r20210707.tif"

tif7 <- "data/cercospora_data/multispec_2021_2022/7_r20210707.tif"

tif8 <- "data/cercospora_data/multispec_2021_2022/8_r20210707.tif"

tif9 <- "data/cercospora_data/multispec_2021_2022/9_r20210707.tif"

tif10 <- "data/cercospora_data/multispec_2021_2022/10_r20210707.tif"It may be convenient to increase image pixel size (to make the raster lighter):

p01 <- terra::rast(tif1) %>% aggregate(20)

p02 <- terra::rast(tif2) %>% aggregate(20)

p03 <- terra::rast(tif3) %>% aggregate(20)

p04 <- terra::rast(tif4) %>% aggregate(20)

p05 <- terra::rast(tif5) %>% aggregate(20)

p06 <- terra::rast(tif6) %>% aggregate(20)

p07 <- terra::rast(tif7) %>% aggregate(20)

p08 <- terra::rast(tif8) %>% aggregate(20)

p09 <- terra::rast(tif9) %>% aggregate(20)

p10 <- terra::rast(tif10) %>% aggregate(20)Let’s check what we get:

p01class : SpatRaster

dimensions : 15, 9, 5 (nrow, ncol, nlyr)

resolution : 0.212, 0.212 (x, y)

extent : 334196.9, 334198.8, 4748990, 4748993 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source(s) : memory

names : 1_r20210707_1, 1_r20210707_2, 1_r20210707_3, 1_r20210707_4, 1_r20210707_5

min values : 0.01288329, 0.02743819, 0.01721323, 0.06647335, 0.1167282

max values : 0.03016389, 0.06923942, 0.07651012, 0.18791453, 0.4155874 Note that each image has 5 bands with ~21 cm pixel size.

As the images have been split in plots, it may be useful to “mosaic” them.

# with many SpatRasters, make a SpatRasterCollection from a list

rlist <- list(p01, p02, p03, p04, p05, p06, p07, p08, p09, p10)

rsrc <- sprc(rlist)

m <- merge(rsrc)What we got?

mclass : SpatRaster

dimensions : 209, 24, 5 (nrow, ncol, nlyr)

resolution : 0.212, 0.212 (x, y)

extent : 334193.8, 334198.9, 4748990, 4749035 (xmin, xmax, ymin, ymax)

coord. ref. : WGS 84 / UTM zone 18N (EPSG:32618)

source(s) : memory

names : 1_r20210707_1, 1_r20210707_2, 1_r20210707_3, 1_r20210707_4, 1_r20210707_5

min values : 0.01390121, 0.02954650, 0.01960831, 0.0706145, 0.1283448

max values : 0.03741143, 0.08793678, 0.08386827, 0.2208381, 0.5030925 Let’s know band names:

names(m)[1] "1_r20210707_1" "1_r20210707_2" "1_r20210707_3" "1_r20210707_4"

[5] "1_r20210707_5"We will rename band names:

# Rename

m2 <- m %>%

rename(blue = "1_r20210707_1", green = "1_r20210707_2",

red = "1_r20210707_3", redge= "1_r20210707_4",

nir = "1_r20210707_5")Let’s get a summary of the mosaic:

summary(m2) blue green red redge

Min. :0.014 Min. :0.030 Min. :0.020 Min. :0.071

1st Qu.:0.019 1st Qu.:0.052 1st Qu.:0.029 1st Qu.:0.113

Median :0.022 Median :0.058 Median :0.041 Median :0.138

Mean :0.023 Mean :0.058 Mean :0.043 Mean :0.139

3rd Qu.:0.026 3rd Qu.:0.064 3rd Qu.:0.056 3rd Qu.:0.164

Max. :0.037 Max. :0.088 Max. :0.084 Max. :0.221

NA's :3896 NA's :3896 NA's :3896 NA's :3896

nir

Min. :0.128

1st Qu.:0.216

Median :0.291

Mean :0.298

3rd Qu.:0.377

Max. :0.503

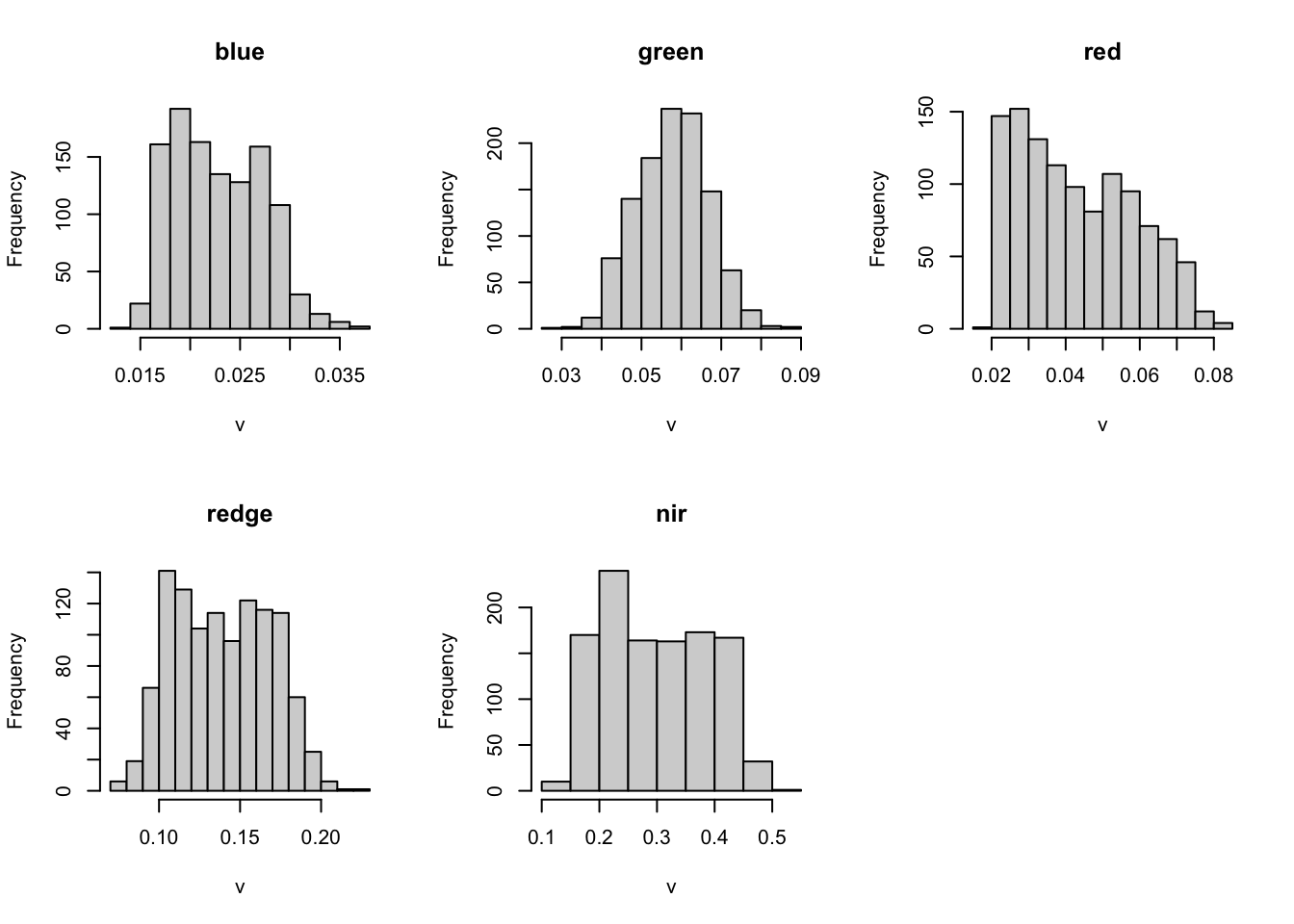

NA's :3896 hist(m2)

Let’s visualize the mosaic:

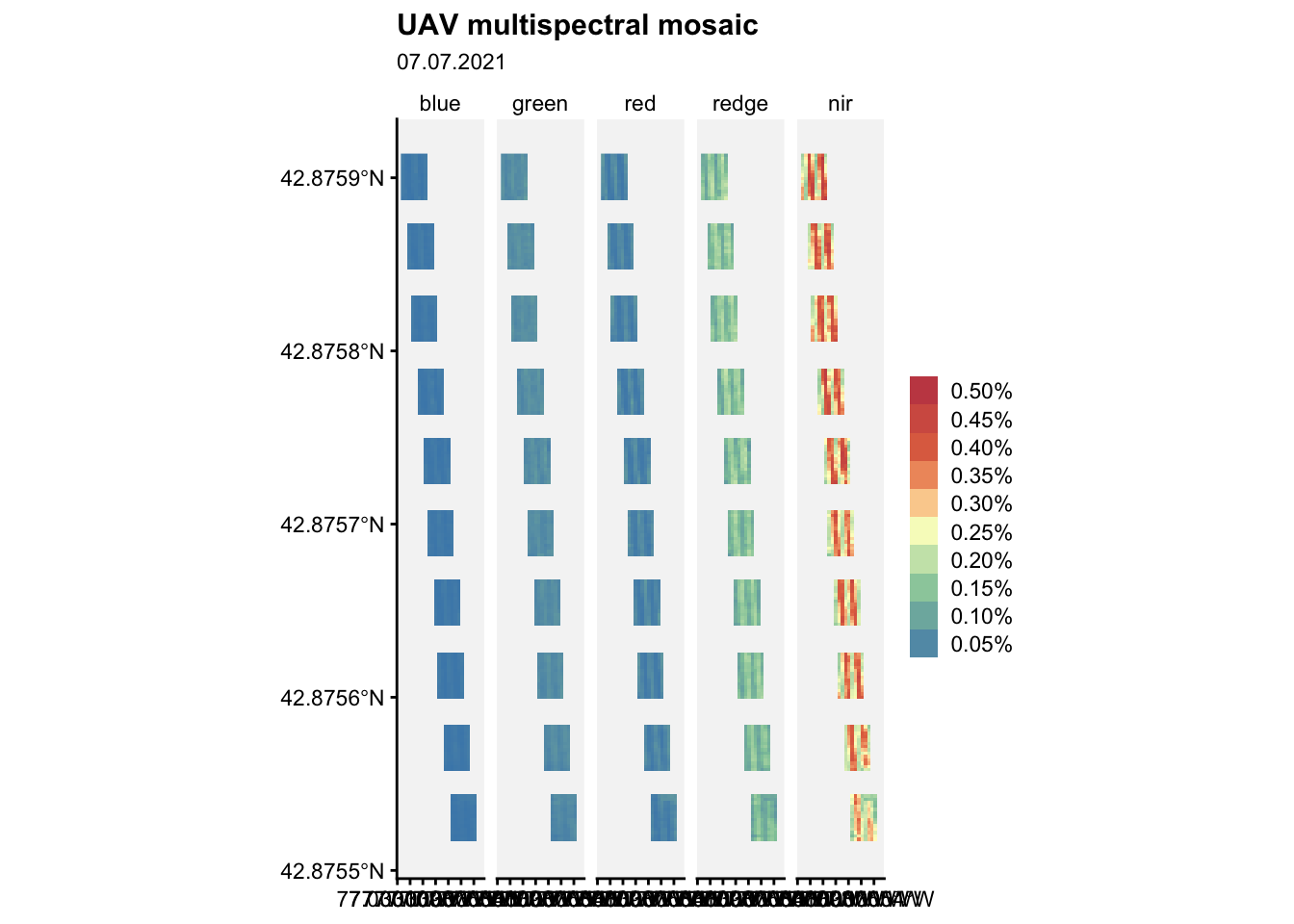

ggplot() +

geom_spatraster(data = m2) +

facet_wrap(~lyr, ncol = 5) +

r4pde::theme_r4pde(font_size = 10)+

scale_fill_whitebox_c(

palette = "muted",

labels = scales::label_number(suffix = "%"),

n.breaks = 10,

guide = guide_legend(reverse = TRUE)

) +

labs(

fill = "",

title = "UAV multispectral mosaic",

subtitle = "07.07.2021"

)Warning: replacing previous import 'car::recode' by 'dplyr::recode' when

loading 'r4pde'

Let’s read all images collected at a later date:

# Open all images collected on 25th August 2021

tif1 <- "data/cercospora_data/multispec_2021_2022/1_r20210825.tif"

tif2 <- "data/cercospora_data/multispec_2021_2022/2_r20210825.tif"

tif3 <- "data/cercospora_data/multispec_2021_2022/3_r20210825.tif"

tif4 <- "data/cercospora_data/multispec_2021_2022/4_r20210825.tif"

tif5 <- "data/cercospora_data/multispec_2021_2022/5_r20210825.tif"

tif6 <- "data/cercospora_data/multispec_2021_2022/6_r20210825.tif"

tif7 <- "data/cercospora_data/multispec_2021_2022/7_r20210825.tif"

tif8 <- "data/cercospora_data/multispec_2021_2022/8_r20210825.tif"

tif9 <- "data/cercospora_data/multispec_2021_2022/9_r20210825.tif"

tif10 <- "data/cercospora_data/multispec_2021_2022/10_r20210825.tif"p01 <- terra::rast(tif1) %>% aggregate(20)

p02 <- terra::rast(tif2) %>% aggregate(20)

p03 <- terra::rast(tif3) %>% aggregate(20)

p04 <- terra::rast(tif4) %>% aggregate(20)

p05 <- terra::rast(tif5) %>% aggregate(20)

p06 <- terra::rast(tif6) %>% aggregate(20)

p07 <- terra::rast(tif7) %>% aggregate(20)

p08 <- terra::rast(tif8) %>% aggregate(20)

p09 <- terra::rast(tif9) %>% aggregate(20)

p10 <- terra::rast(tif10) %>% aggregate(20)# with many SpatRasters, make a SpatRasterCollection from a list

rlist <- list(p01, p02, p03, p04, p05, p06, p07, p08, p09, p10)

rsrc <- sprc(rlist)

mm <- merge(rsrc)names(mm)[1] "1_r20210825_1" "1_r20210825_2" "1_r20210825_3" "1_r20210825_4"

[5] "1_r20210825_5"# Rename

mm2 <- mm %>%

rename(blue = "1_r20210825_1", green = "1_r20210825_2",

red = "1_r20210825_3", redge= "1_r20210825_4",

nir = "1_r20210825_5")Now, a visualization task:

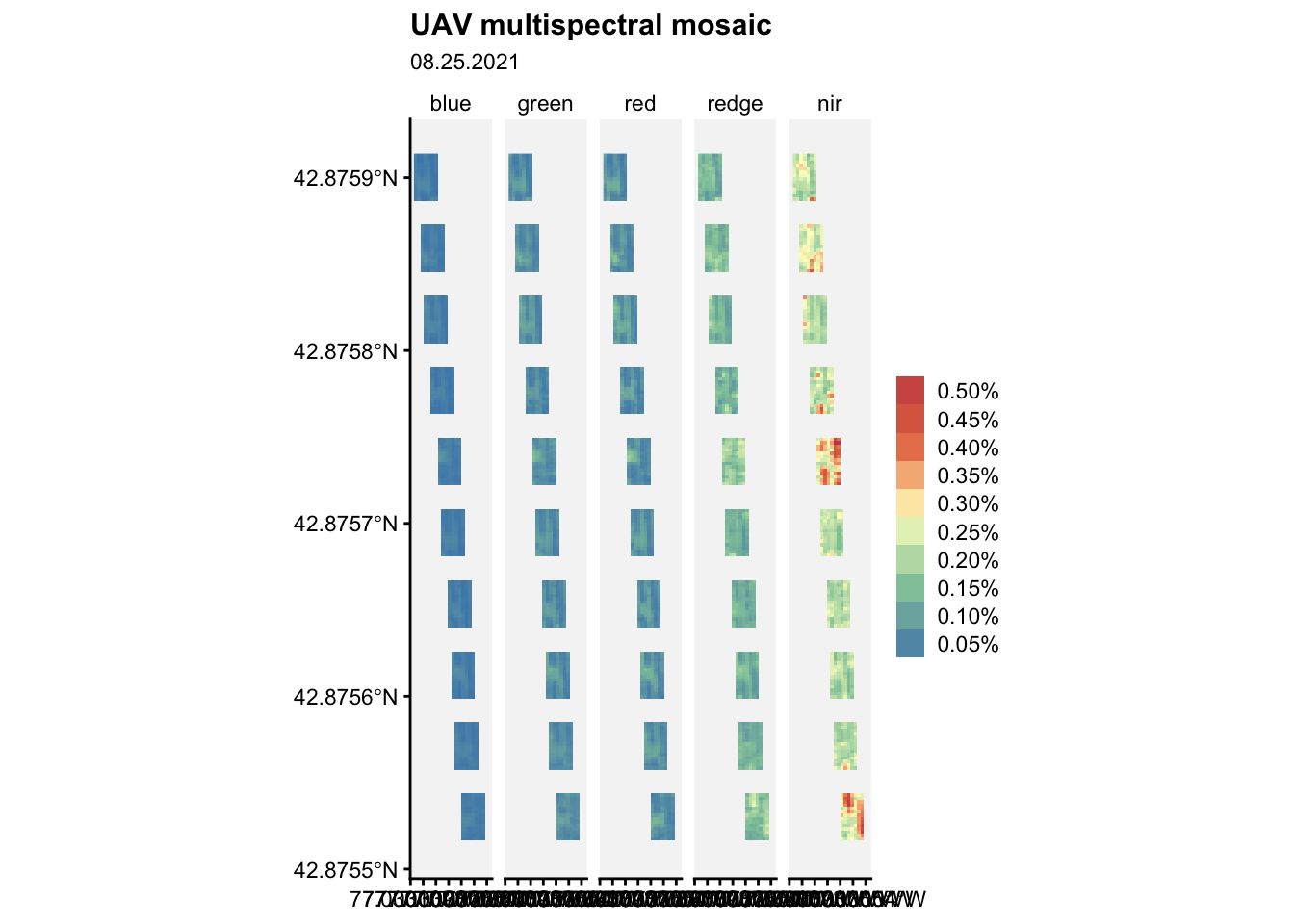

ggplot() +

geom_spatraster(data = mm2) +

facet_wrap(~lyr, ncol = 5) +

r4pde::theme_r4pde(font_size = 10)+

scale_fill_whitebox_c(

palette = "muted",

labels = scales::label_number(suffix = "%"),

n.breaks = 10,

guide = guide_legend(reverse = TRUE)

) +

labs(

fill = "",

title = "UAV multispectral mosaic",

subtitle = "08.25.2021"

)

8.5.7 Reading severity data

Now, let’s read the ground CLS data:

file <- "data/cercospora_data/CLS_DS.csv"

cls <- readr::read_csv(file, col_names = TRUE, show_col_types = FALSE)What we got?

cls# A tibble: 136 × 9

Plot D0 D1 D2 D3 D4 D5 D6 year

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0 0.25 0.9 4.58 8.75 21 NA 2021

2 2 0 0.75 3.68 12.4 20.8 45.4 NA 2021

3 3 0 2.92 7.38 18 34.6 65.2 NA 2021

4 4 0 0.75 5.45 17.4 42.6 75.5 NA 2021

5 5 0 3.38 3.45 15.4 17.2 55.2 NA 2021

6 6 0 0.8 1.38 8.8 12.2 15.4 NA 2021

7 7 0 0.375 3.05 9.1 13 31.0 NA 2021

8 8 0 3.7 8.4 29.4 41.2 71.8 NA 2021

9 9 0 2.38 4.28 13.2 15.6 38.1 NA 2021

10 10 0 2.22 2.28 30.2 17.3 68 NA 2021

# ℹ 126 more rowsNote that columns D0 to D6 refer to the disease severity recorded in percentage at different dates for each study plot. Note also that this object is structured in wide format (i.e. each row represents a single plot for each year).

unique(cls$Plot) [1] 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25

[26] 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50

[51] 51 52 53 54 55 56Let’s know the values stored in the year attribute:

unique(cls$year)[1] 2021 2022 20238.5.8 Preparing a set of spectral covariables per plot & date

The 2021 data collection dates are 20210707, 20210715, 20210720, 20210802,and 20210825.

Let’s start creating a list of images per date for 2021:

# Create a list .tif files collected at each date:

# These are the DXfiles

D0files <- Sys.glob("data/cercospora_data/multispec_2021_2022/*_r20210707.tif")

D1files <- Sys.glob("data/cercospora_data/multispec_2021_2022/*_r20210715.tif")

D2files <- Sys.glob("data/cercospora_data/multispec_2021_2022/*_r20210720.tif")

D3files <- Sys.glob("data/cercospora_data/multispec_2021_2022/*_r20210802.tif")

D4files <- Sys.glob("data/cercospora_data/multispec_2021_2022/*_r20210825.tif")Now, define date variables:

# These are the DX variables

(D0 <- as.Date('7/7/2021',format='%m/%d/%Y'))[1] "2021-07-07"(D1 <- as.Date('7/15/2021',format='%m/%d/%Y'))[1] "2021-07-15"(D2 <- as.Date('7/20/2021',format='%m/%d/%Y'))[1] "2021-07-20"(D3 <- as.Date('8/02/2021',format='%m/%d/%Y'))[1] "2021-08-02"(D4 <- as.Date('8/25/2021',format='%m/%d/%Y'))[1] "2021-08-25"The following block of code should be executed five times (one per each DXfiles & each DX variable).

The code reads each image included in a given DXfiles, computes several vegetation indices (VIs) for such image, and obtain the average value of original bands & VIs per each. All values are stored in a vector object.

# Read all files for each date

lista <- lapply(D0files, function(x) rast(x))

# Compute global statistics

output <- vector("double", 5) # 1. output

for (i in seq_along(lista)) { # 2. sequence

img <- lista[[i]]

r <- clamp(img, 0, 1)

ndvi <- (r[[5]]-r[[3]])/(r[[5]]+r[[3]])

ndre = (r[[5]]-r[[4]]) / (r[[5]]+r[[4]])

cire = (r[[5]]) / (r[[4]]-1)

sipi = (r[[5]] - r[[1]]) / (r[[4]]-r[[3]])

min <- minmax(ndvi)[1]

max <- minmax(ndvi)[2]

avg <- global(ndvi, fun="mean", na.rm=TRUE)[,1]

#dev <- global(ndvi, fun="std")

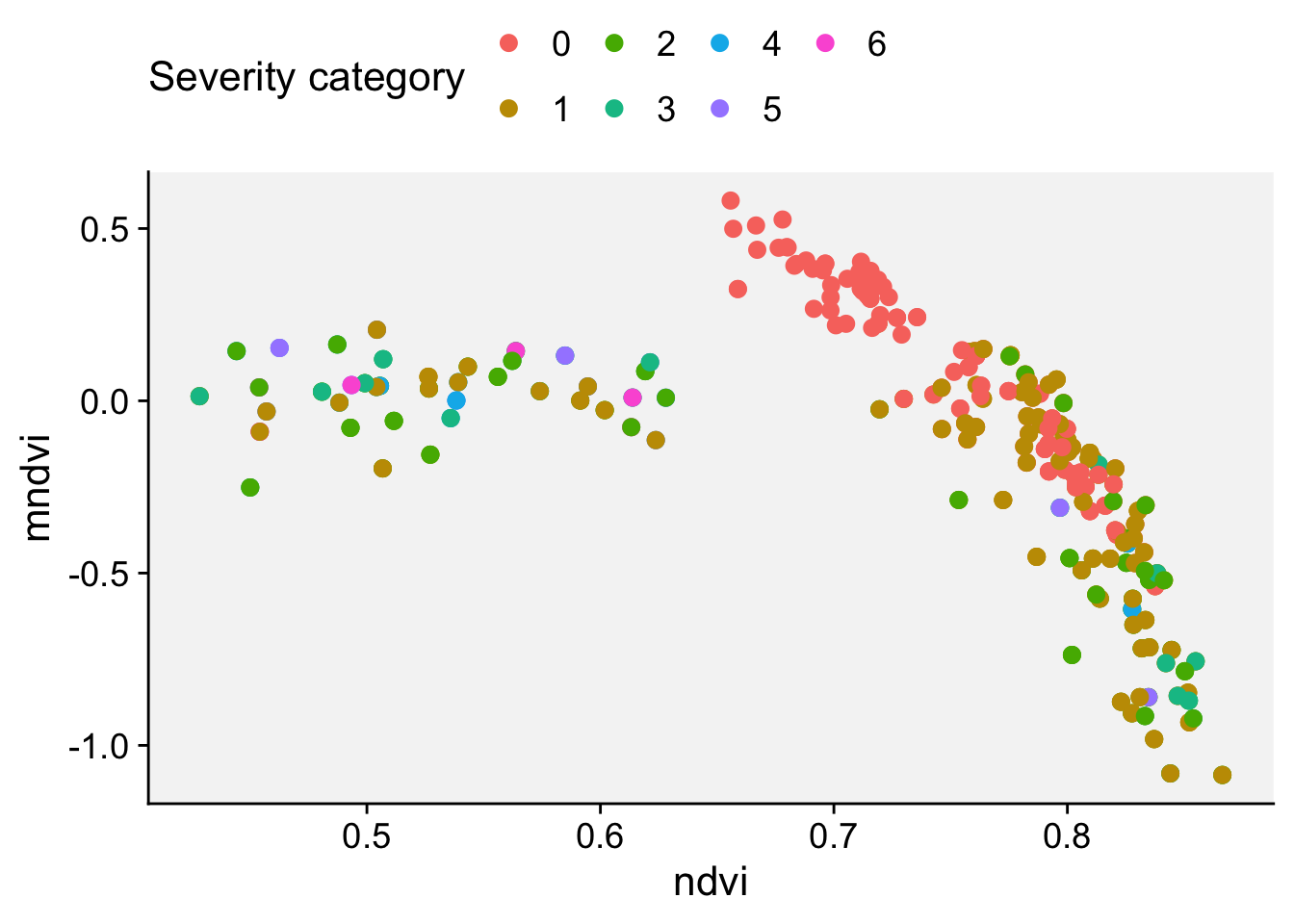

#mndvi <- (ndvi-min)/(max-min)

mndvi <- 2 + log((ndvi-avg)/(max-min))

nimg <- c(r,ndvi, ndre, cire, sipi, mndvi)

output[i] <- global(nimg, fun="mean", na.rm=TRUE)

#output[i] <- global(nimg, mean, na.rm=TRUE)

}The following block of code combine all vectors produced previously into a matrix as a previous step to put all data into a dataframe with meaningful colummn names. It also add colummns storing each plot ID as well as the corresponding data. Note the the code also needs to be executed five times (one per each DX variable).

#combine all vectors into a matrix

mat <- do.call("rbind",output) #combine all vectors into a matrix

#convert matrix to data frame

df <- as.data.frame(mat)

#specify column names

colnames(df) <- c('blue', 'green', 'red', 'redge', 'nir', 'ndvi',

'ndre', 'cire', 'sipi', 'mndvi')

date = D0

plot <- seq(1:40)

date <- rep(date,40)

newdf <- cbind(df,plot,date)What we got?

newdf blue green red redge nir ndvi ndre

1 0.02343657 0.05959132 0.04362133 0.1444027 0.3131252 0.6988392 0.3515343

2 0.02282100 0.05970168 0.04427105 0.1432050 0.3003496 0.6909051 0.3404127

3 0.02229088 0.06034372 0.04296553 0.1441267 0.3037592 0.6985894 0.3424855

4 0.02211413 0.06289556 0.04265936 0.1493579 0.3175088 0.7124582 0.3477512

5 0.02311490 0.05985614 0.04618947 0.1392655 0.2849417 0.6763379 0.3314924

6 0.02237584 0.06117992 0.04150024 0.1462904 0.3117826 0.7198424 0.3504247

7 0.02086133 0.05870957 0.03996187 0.1412853 0.3017970 0.7235433 0.3521370

8 0.02132975 0.05750314 0.03989218 0.1370614 0.2956135 0.7157179 0.3550798

9 0.02188219 0.05869401 0.04093412 0.1413798 0.3023368 0.7115535 0.3503842

10 0.02260319 0.05975366 0.04357307 0.1429067 0.2992025 0.6962978 0.3402171

11 0.02089238 0.04880216 0.04060995 0.1215226 0.2446871 0.6569093 0.3221139

12 0.02350888 0.05890453 0.04759297 0.1368886 0.2807072 0.6557864 0.3276572

13 0.02218707 0.05638867 0.04349103 0.1341888 0.2819767 0.6780262 0.3441717

14 0.02275558 0.05684062 0.04544145 0.1339135 0.2753768 0.6666547 0.3337416

15 0.02272742 0.05738843 0.04389310 0.1379283 0.2873050 0.6838893 0.3418451

16 0.02140498 0.05666796 0.04005207 0.1370657 0.2937609 0.7116084 0.3544429

17 0.02086186 0.05736772 0.03807191 0.1398500 0.2991122 0.7290118 0.3560801

18 0.02015755 0.05423116 0.03848824 0.1311203 0.2787107 0.7109047 0.3537186

19 0.02193068 0.05637152 0.03996171 0.1306193 0.2834647 0.7008749 0.3578544

20 0.02214865 0.05725453 0.04177672 0.1331511 0.2830351 0.6914292 0.3488766

21 0.02122350 0.05473366 0.04193676 0.1295753 0.2737450 0.6830712 0.3463346

22 0.02200368 0.05443210 0.04510647 0.1327359 0.2787727 0.6671694 0.3387558

23 0.02334062 0.05691194 0.04427723 0.1309551 0.2608062 0.6588717 0.3212313

24 0.02211155 0.06021804 0.03976967 0.1403651 0.3081556 0.7156574 0.3615820

25 0.02174683 0.05562259 0.03816646 0.1312835 0.2858236 0.7057869 0.3560048

26 0.02082576 0.05881460 0.03393639 0.1407333 0.3136037 0.7548543 0.3704691

27 0.02192849 0.06039847 0.03532052 0.1438516 0.3201219 0.7514753 0.3694975

28 0.02302246 0.06453867 0.03590782 0.1454413 0.3360538 0.7627826 0.3862154

29 0.02060561 0.05634195 0.03435760 0.1348848 0.3139023 0.7577029 0.3915793

30 0.02453648 0.05776692 0.04101824 0.1400822 0.3165895 0.7123657 0.3729309

31 0.02449699 0.06516875 0.04320010 0.1488943 0.3369249 0.7188490 0.3734588

32 0.02375349 0.06403329 0.04276755 0.1462964 0.3370367 0.7210620 0.3804087

33 0.02255618 0.05711345 0.04411843 0.1383896 0.2971490 0.6880898 0.3481446

34 0.02217878 0.05772793 0.04388026 0.1337953 0.2885867 0.6798194 0.3490214

35 0.02187461 0.05617408 0.04287472 0.1365350 0.2917336 0.6953013 0.3481033

36 0.02296074 0.05817811 0.04563811 0.1391187 0.2921268 0.6801861 0.3393280

37 0.02267559 0.05957335 0.04213828 0.1420391 0.3082079 0.7142905 0.3568940

38 0.02360669 0.05999425 0.04294853 0.1433218 0.3103642 0.7052194 0.3528355

39 0.02364231 0.06191251 0.04298429 0.1483199 0.3220375 0.7163665 0.3560244

40 0.02427710 0.06139374 0.04394927 0.1444968 0.3085851 0.6985304 0.3476681

cire sipi mndvi plot date

1 -0.3789603 3.641733 0.33578281 1 2021-07-07

2 -0.3623515 3.550125 0.38310911 2 2021-07-07

3 -0.3674174 3.484420 0.30046194 3 2021-07-07

4 -0.3865963 3.431203 0.36371401 4 2021-07-07

5 -0.3408283 3.558089 0.44423685 5 2021-07-07

6 -0.3768460 3.376905 0.24863497 6 2021-07-07

7 -0.3618540 3.407052 0.30100962 7 2021-07-07

8 -0.3531518 3.494425 0.34119340 8 2021-07-07

9 -0.3637379 3.481473 0.32563500 9 2021-07-07

10 -0.3605456 3.516463 0.39810832 10 2021-07-07

11 -0.2870937 3.673143 0.49929140 11 2021-07-07

12 -0.3354612 3.761214 0.58121969 12 2021-07-07

13 -0.3377332 3.773541 0.52614654 13 2021-07-07

14 -0.3282154 3.695583 0.50885882 14 2021-07-07

15 -0.3454207 3.635421 0.39704537 15 2021-07-07

16 -0.3519775 3.536311 0.40365150 16 2021-07-07

17 -0.3597412 3.381570 0.19228050 17 2021-07-07

18 -0.3315227 3.570230 0.37412852 18 2021-07-07

19 -0.3374564 3.779183 0.21921958 19 2021-07-07

20 -0.3374060 3.622386 0.26701154 20 2021-07-07

21 -0.3250037 3.703904 0.39212223 21 2021-07-07

22 -0.3321942 3.766054 0.43835034 22 2021-07-07

23 -0.3098876 3.691888 0.32447853 23 2021-07-07

24 -0.3720515 3.512840 0.37734386 24 2021-07-07

25 -0.3404875 3.509041 0.35422784 25 2021-07-07

26 -0.3779853 3.204817 0.14699663 26 2021-07-07

27 -0.3867478 3.270867 0.08401903 27 2021-07-07

28 -0.4060356 3.304020 0.01329941 28 2021-07-07

29 -0.3747234 3.590456 0.09783340 29 2021-07-07

30 -0.3813865 4.120632 0.31838109 30 2021-07-07

31 -0.4099608 3.819633 0.35157164 31 2021-07-07

32 -0.4091370 3.878319 0.33094172 32 2021-07-07

33 -0.3558508 3.668159 0.40724176 33 2021-07-07

34 -0.3443164 4.378597 0.44631347 34 2021-07-07

35 -0.3486557 3.563385 0.37868256 35 2021-07-07

36 -0.3494958 3.645456 0.44505296 36 2021-07-07

37 -0.3711740 3.527677 0.30651449 37 2021-07-07

38 -0.3742929 3.591207 0.22357258 38 2021-07-07

39 -0.3906997 3.483071 0.21173498 39 2021-07-07

40 -0.3740427 3.568325 0.26238290 40 2021-07-07The following block of code writes to disk a DX_2021.csv file. Note that the append parameter is set to FALSE in order to overwrite any existing data.

write_csv(

newdf,

"data/cercospora_data/D0_2021.csv",

na = "NA",

col_names = TRUE,

append = FALSE

)8.5.9 Put all covariate data in a single object

Now, we will read all the recently created csv files and put all covariate data values into a single object.

Next code obtains a list with the path of every csv file matching the D*_2021.csv expression:

(csv_files <- Sys.glob("data/cercospora_data/D*_2021.csv"))[1] "data/cercospora_data/D0_2021.csv" "data/cercospora_data/D1_2021.csv"

[3] "data/cercospora_data/D2_2021.csv" "data/cercospora_data/D3_2021.csv"

[5] "data/cercospora_data/D4_2021.csv"Now, we will read all files in the list:

(df.2021 <- do.call(rbind,lapply(csv_files,read.csv))) blue green red redge nir ndvi ndre

1 0.02343657 0.05959132 0.04362133 0.1444027 0.3131252 0.6988392 0.3515343

2 0.02282100 0.05970168 0.04427105 0.1432050 0.3003496 0.6909051 0.3404127

3 0.02229088 0.06034372 0.04296553 0.1441267 0.3037592 0.6985894 0.3424855

4 0.02211413 0.06289556 0.04265936 0.1493579 0.3175088 0.7124582 0.3477512

5 0.02311490 0.05985614 0.04618947 0.1392655 0.2849417 0.6763379 0.3314924